Use Cilium to provide networking services to containers inside Oracle Container Engine for Kubernetes (OKE)

When you deploy a new Kubernetes Cluster using Oracle Container Engine for Kubernetes (OKE), the default CNI plugin that is installed is the VCN-Native plugin. With Cloud Native Computing you are free to choose how you provide network (security) services to your container platform, simply by choosing another CNI plugin. In this tutorial, we are going to deploy a new Kubernetes Cluster using OKE with the Flannel CNI plugin, and then replace Flannel with the Cilium CNI plugin. Cilium offers additional network (security) features compared to the VCN-Native plugin and Flannel.

The Steps

- STEP 01: Deploy a Kubernetes Cluster (using OKE custom create)

- STEP 02: Install Cilium as a CNI on the OKE deployed Kubernetes Cluster

- STEP 03: Deploy a sample application

- STEP 04: Configure Kubernetes Services of Type NetworkPolicy

- STEP 05: Removing the sample application and Kubernetes Services of Type NetworkPolicy

- STEP 06: Deploy a sample application and configure Kubernetes Services of Type LoadBalancer (and clean up again)

STEP 01 - Deploy a Kubernetes Cluster using OKE custom-create

In [this] article you can read about the different OKE deployment models you can choose from.

The flavors are as follows:

- Example 1: Cluster with Flannel CNI Plugin, Public Kubernetes API Endpoint, Private Worker Nodes, and Public Load Balancers

- Example 2: Cluster with Flannel CNI Plugin, Private Kubernetes API Endpoint, Private Worker Nodes, and Public Load Balancers

- Example 3: Cluster with OCI CNI Plugin, Public Kubernetes API Endpoint, Private Worker Nodes, and Public Load Balancers

- Example 4: Cluster with OCI CNI Plugin, Private Kubernetes API Endpoint, Private Worker Nodes, and Public Load Balancers

I have chosen the Example 1 deployment model for this tutorial. I have previously explained how to deploy Example 3 in [this article].

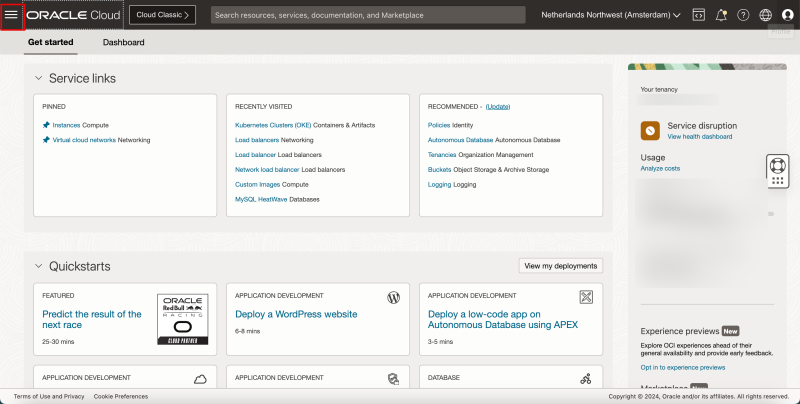

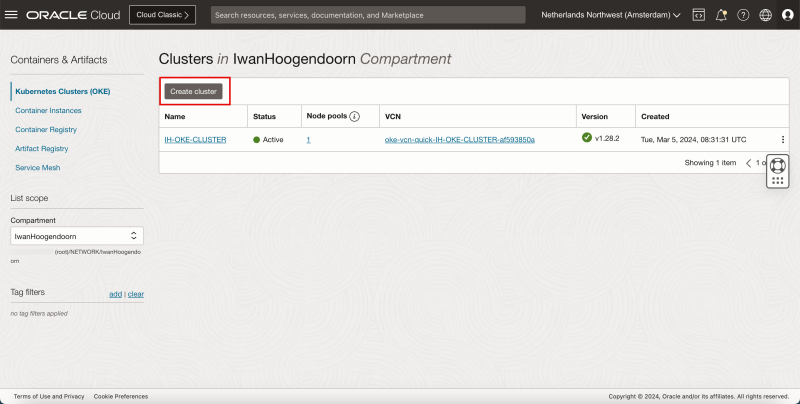

- Click the hamburger menu.

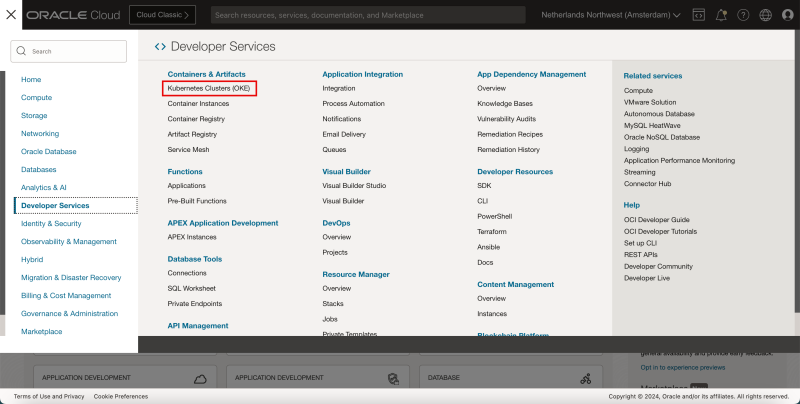

- Click on Developer Services.

- Click Kubernetes Clusters (OKE).

- Click on Create Cluster.

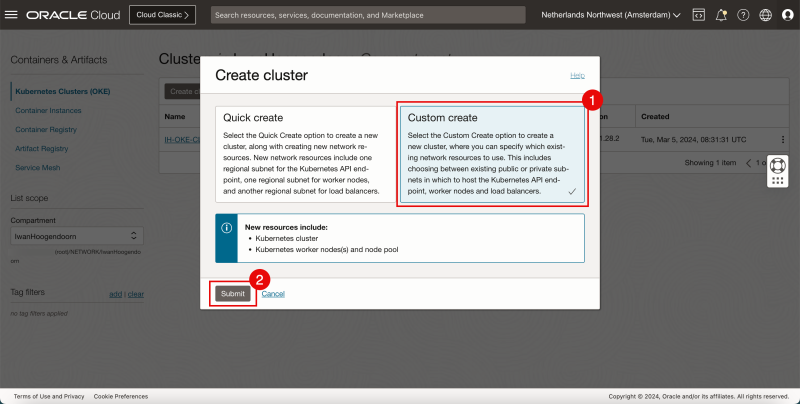

- Select custom create.

- Click on Submit.

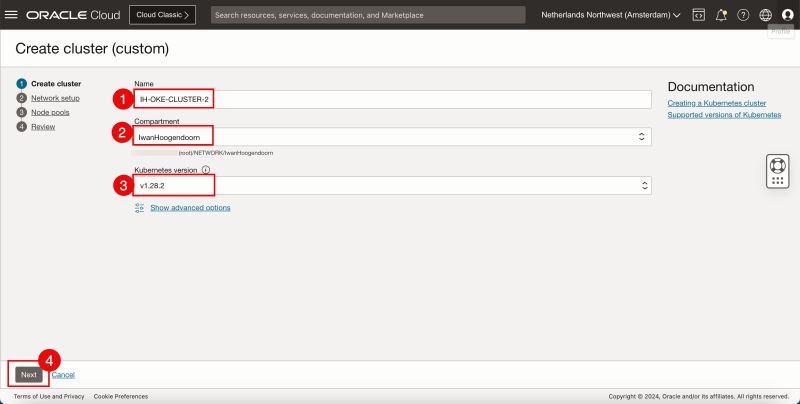

- Enter a Cluster Name.

- Select a Compartment.

- Select the Kubernetes version.

- Click on Next.

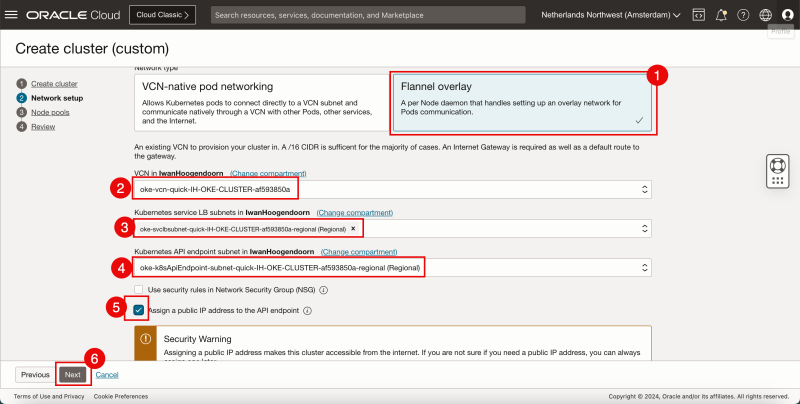

- Select Flannel overlay as the network type.

- Select a VCN that you want to use to deploy the new Kubernetes Cluster.

- Make sure you have created a VCN before this step; this is explained [here].

- Select a subnet (inside the VCN you just selected) to provide IP addresses to the Kubernetes Services.

- Make sure you have created a Subnet before this step; this is explained [here].

- Select a subnet (inside the VCN you just selected) to provide IP addresses to the Kubernetes API Endpoints.

- Make sure you have created a Subnet before this step; this is explained [here].

- Select Assign a public IP address to the API endpoint.

- Click on Next.

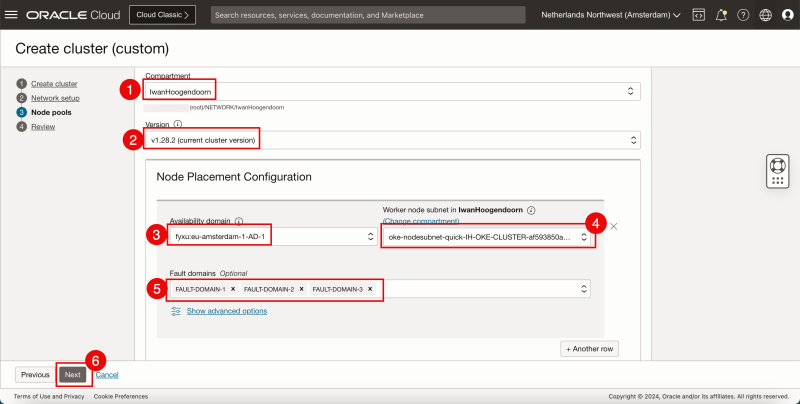

For the node pool (Worker Nodes), you can select different settings, but we are going to try to keep them the same.

- Select a Compartment.

- Select the Kubernetes version.

- Select an Availability Domain.

- Select a subnet (inside the VCN you just selected) to provide IP addresses to the Kubernetes Worker Nodes.

- Make sure you have created a Subnet before this step; this is explained [here].

- Select the Fault Domains you want to make use of to place your worker nodes in.

- Click on Next.

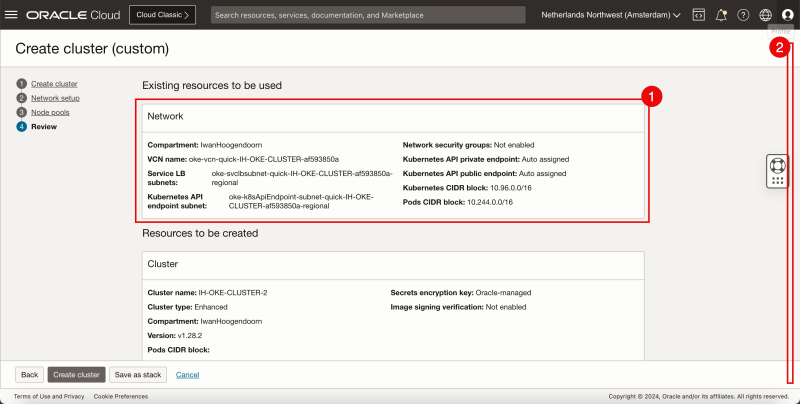

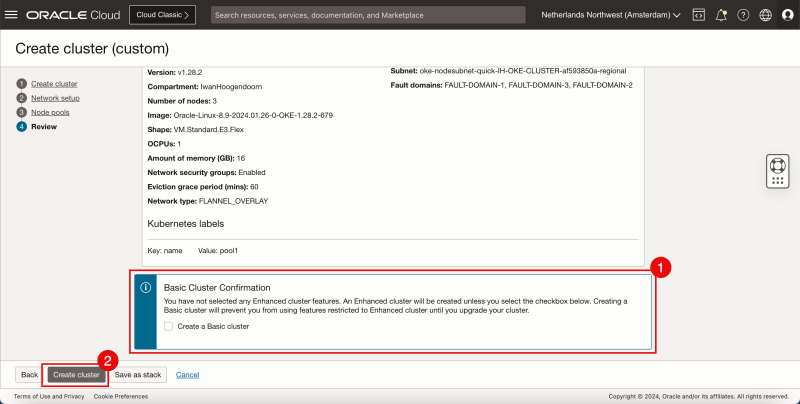

- Review the Network parameters.

- Scroll down.

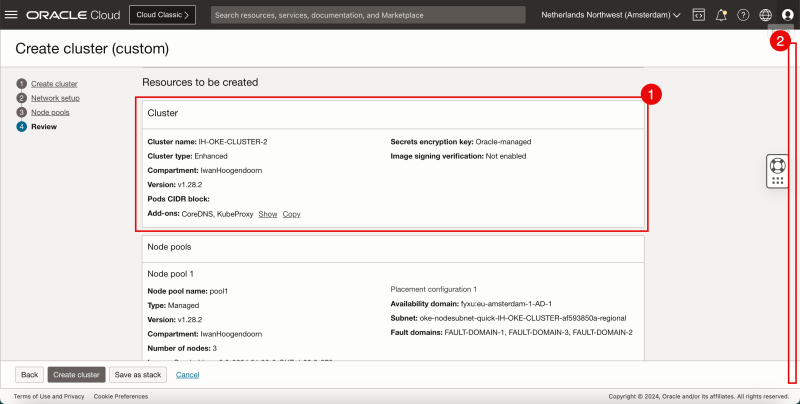

- Review the Cluster parameters.

- Scroll down.

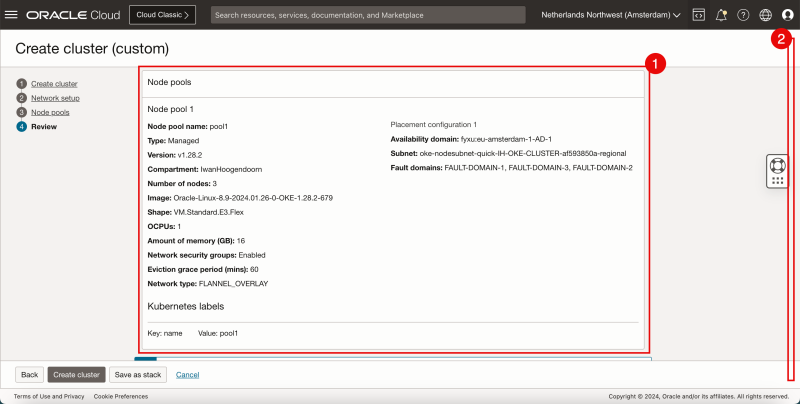

- Review the Node Pools parameters.

- Scroll down.

- Do NOT select to create a Basic Cluster.

- Click on Create cluster.

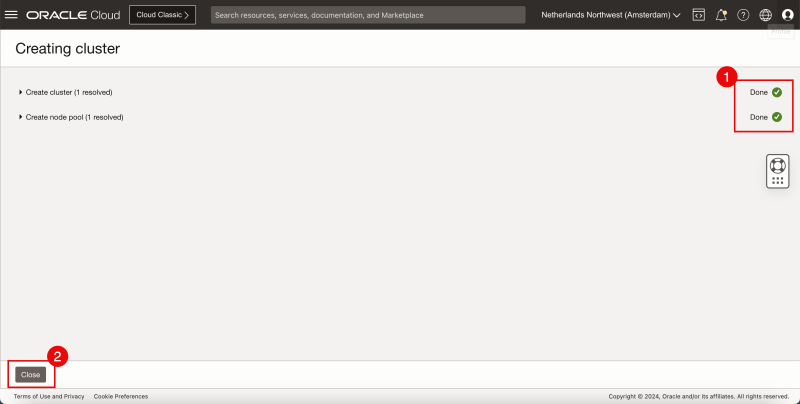

- Review the status of the different components that are created.

- Click on Close.

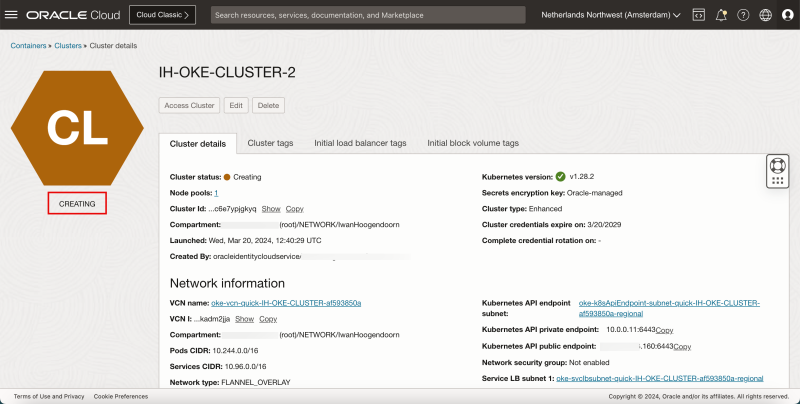

- Review that the status is CREATING.

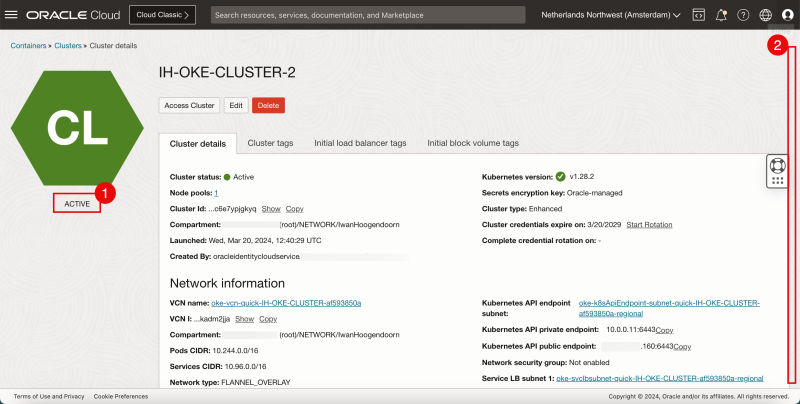

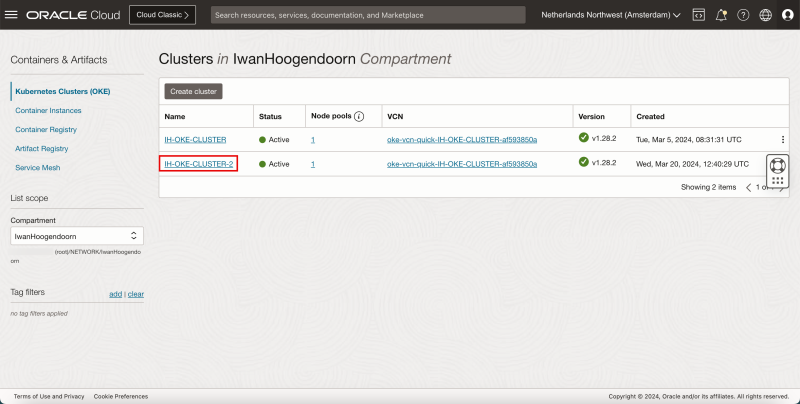

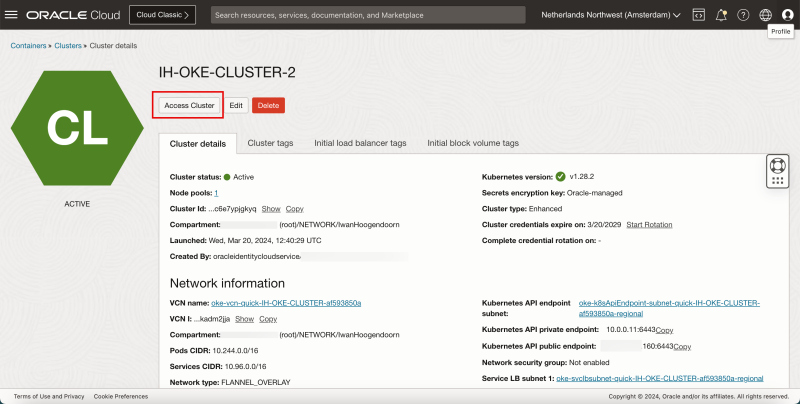

- Review that the status is ACTIVE.

- Scroll down.

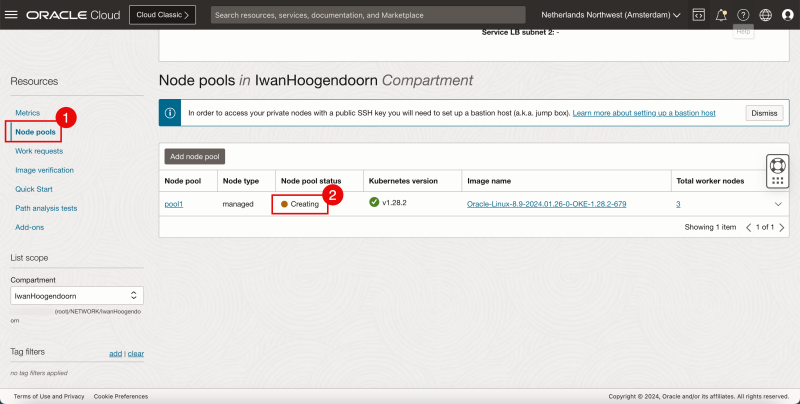

- Click on Node Pools.

- Notice that the (Worker) Nodes in the pool are still being created.

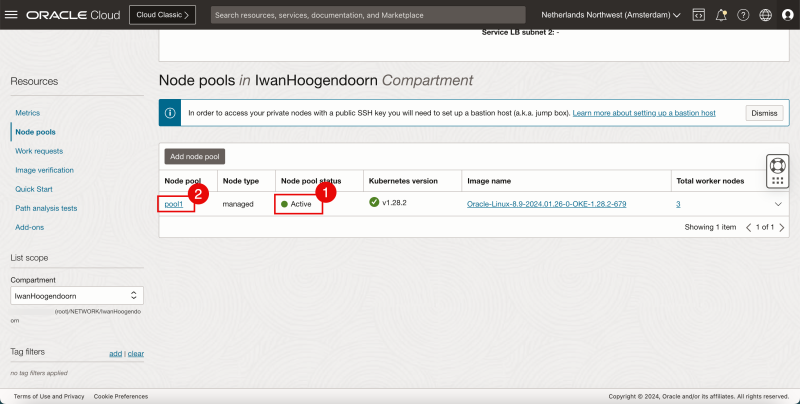

- After a few minutes notice that the (Worker) Nodes in the pool are active.

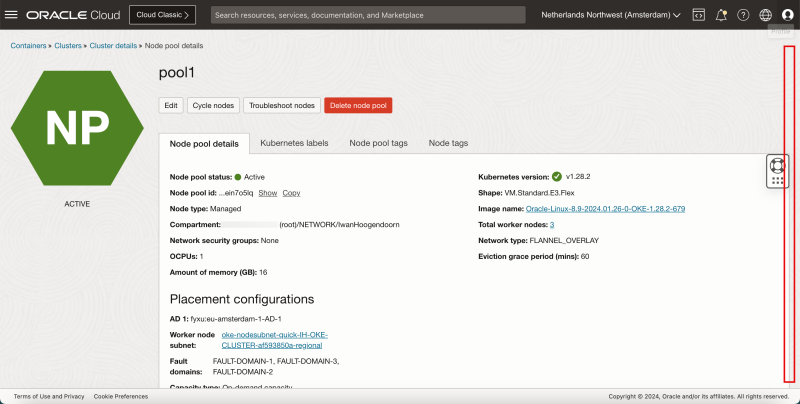

- Click on the Node Pool name.

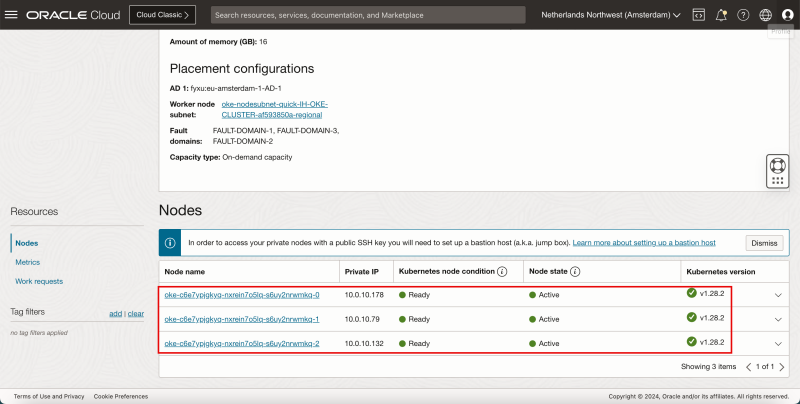

- Scroll down.

- Notice that all Nodes are Ready and Active.

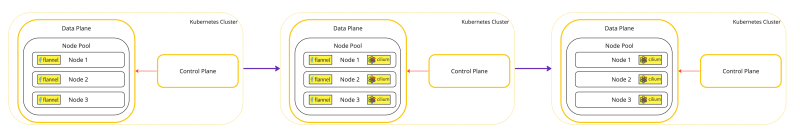

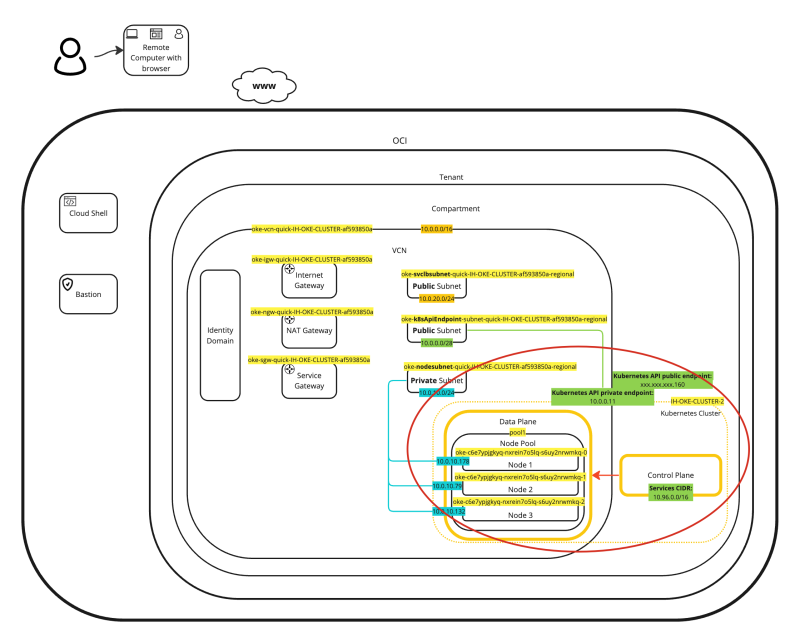

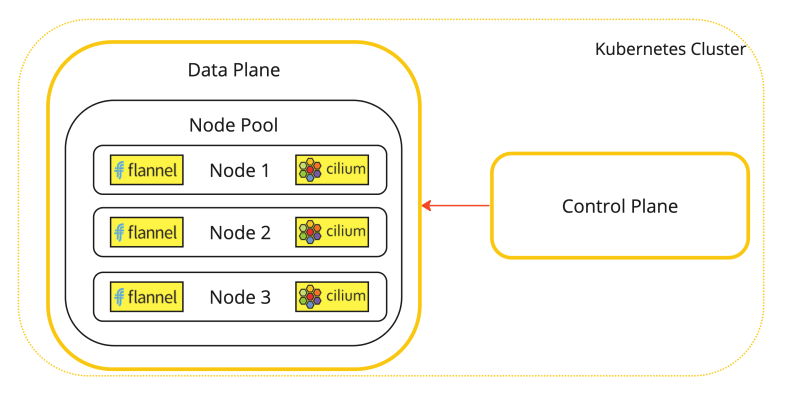

A visual representation of this deployment can be found in the diagram below.

- Go back to the OKE overview page: Developer Services > Kubernetes Clusters (OKE).

- Click on the newly deployed Kubernetes Cluster.

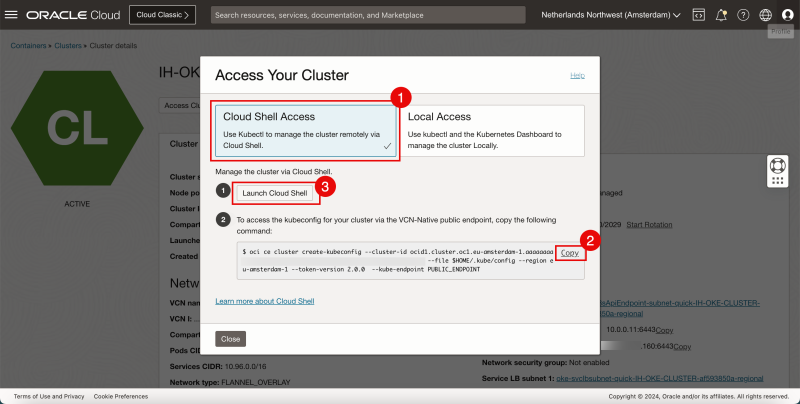

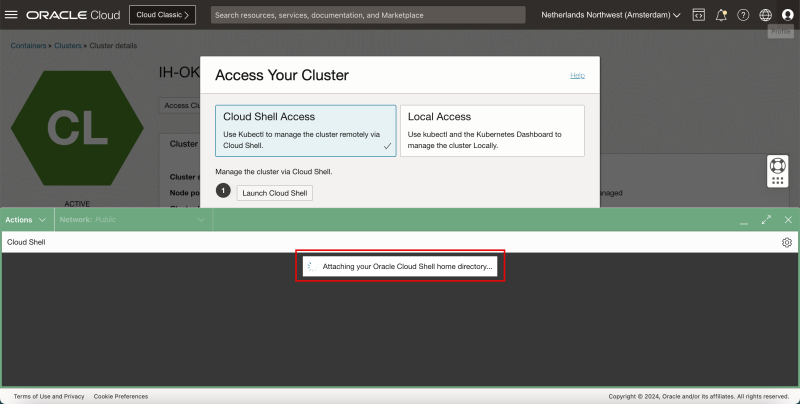

- Click on Access Cluster.

- Select Cloud Shell Access.

- Click on Copy to copy the command to allow access to the Kubernetes Cluster.

- Click on Launch Cloud Shell.

- The Cloud Shell will now start.

- Some informational messages will be shown on what is happening in the background.

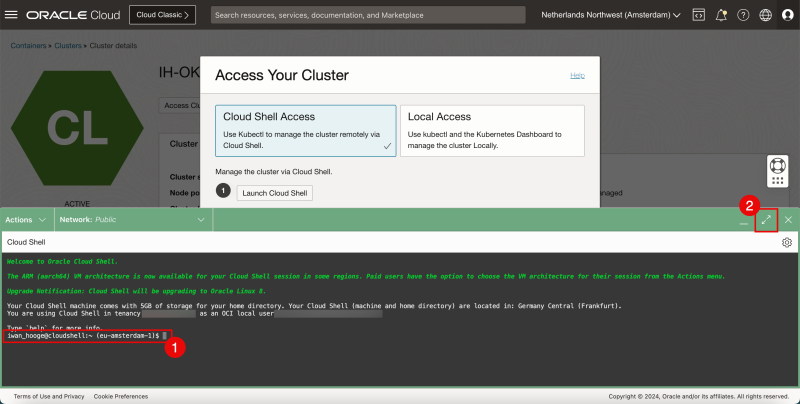

- Notice that you have Shell Access.

- Click on Maximise to make the Cloud Shell window larger.

- Paste in the command that was copied before.

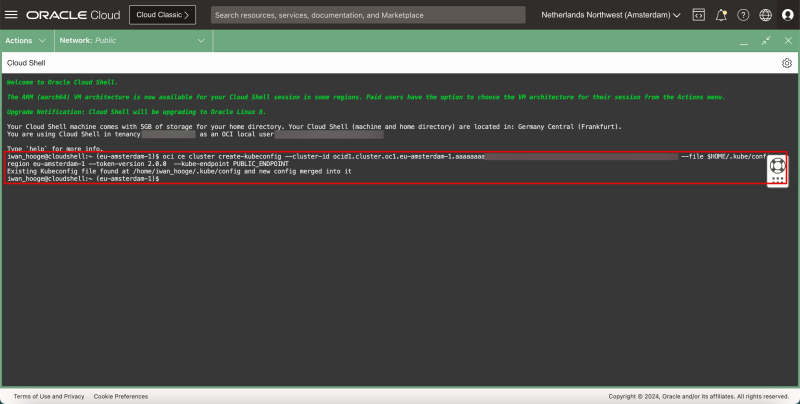

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ oci ce cluster create-kubeconfig --cluster-id ocid1.cluster.oc1.eu-amsterdam-1.aaaaaaaaXXX --file $HOME/.kube/config --region eu-amsterdam-1 --token-version 2.0.0 --kube-endpoint PUBLIC_ENDPOINT

Existing Kubeconfig file found at /home/iwan_hooge/.kube/config and new config merged into it

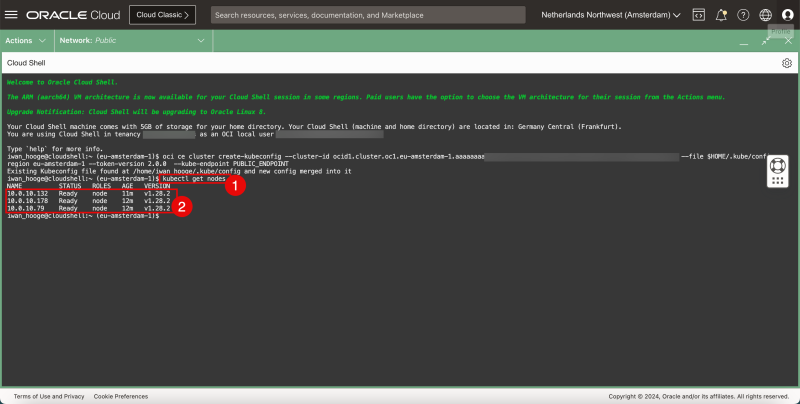

- Issue the following command to gather information about the Worker Nodes:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.10.132 Ready node 11m v1.28.2

10.0.10.178 Ready node 12m v1.28.2

10.0.10.79 Ready node 12m v1.28.2

- Review the deployed worker nodes.
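The review of the worker nodes can also be scripted. The following is a minimal sketch and not part of the original walkthrough: the function name count_ready_nodes is hypothetical, and the sample text is copied from the output above so that the snippet runs without access to a cluster.

```shell
# Count the nodes whose STATUS column is exactly "Ready".
# Reads the table printed by `kubectl get nodes` from stdin and skips the header row.
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Sample copied from the output above. On a live cluster you would run:
#   kubectl get nodes | count_ready_nodes
sample_nodes='NAME          STATUS   ROLES   AGE   VERSION
10.0.10.132   Ready    node    11m   v1.28.2
10.0.10.178   Ready    node    12m   v1.28.2
10.0.10.79    Ready    node    12m   v1.28.2'

printf '%s\n' "$sample_nodes" | count_ready_nodes
```

If the number printed is lower than the node count you configured for the node pool, wait a few minutes and run the check again.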

STEP 02 - Install Cilium as a CNI on the OKE-deployed Kubernetes Cluster

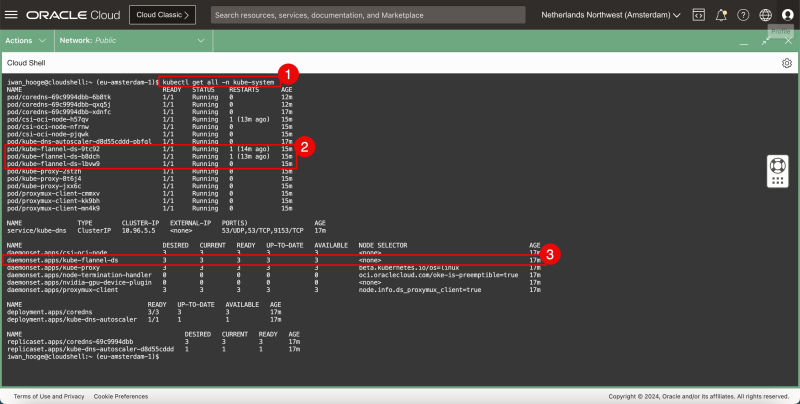

1. Issue the following command to verify the currently deployed CNI plugin (Flannel):

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-69c9994dbb-6b8tk 1/1 Running 0 12m

pod/coredns-69c9994dbb-qxq5j 1/1 Running 0 12m

pod/coredns-69c9994dbb-xdnfc 1/1 Running 0 16m

pod/csi-oci-node-h57qv 1/1 Running 1 (12m ago) 14m

pod/csi-oci-node-nfrnw 1/1 Running 0 14m

pod/csi-oci-node-pjqwk 1/1 Running 0 15m

pod/kube-dns-autoscaler-d8d55cddd-pbfql 1/1 Running 0 16m

pod/kube-flannel-ds-9tc92 1/1 Running 1 (13m ago) 14m

pod/kube-flannel-ds-b8dch 1/1 Running 1 (12m ago) 14m

pod/kube-flannel-ds-lbvw9 1/1 Running 0 15m

pod/kube-proxy-2stzh 1/1 Running 0 15m

pod/kube-proxy-8t6j4 1/1 Running 0 14m

pod/kube-proxy-jxx6c 1/1 Running 0 14m

pod/proxymux-client-cmmxv 1/1 Running 0 15m

pod/proxymux-client-kk9bh 1/1 Running 0 14m

pod/proxymux-client-mn4k9 1/1 Running 0 14m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.96.5.5 <none> 53/UDP,53/TCP,9153/TCP 16m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/csi-oci-node 3 3 3 3 3 <none> 16m

daemonset.apps/kube-flannel-ds 3 3 3 3 3 <none> 16m

daemonset.apps/kube-proxy 3 3 3 3 3 beta.kubernetes.io/os=linux 16m

daemonset.apps/node-termination-handler 0 0 0 0 0 oci.oraclecloud.com/oke-is-preemptible=true 16m

daemonset.apps/nvidia-gpu-device-plugin 0 0 0 0 0 <none> 16m

daemonset.apps/proxymux-client 3 3 3 3 3 node.info.ds_proxymux_client=true 16m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 3/3 3 3 16m

deployment.apps/kube-dns-autoscaler 1/1 1 1 16m

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-69c9994dbb 3 3 3 16m

replicaset.apps/kube-dns-autoscaler-d8d55cddd 1 1 1 16m

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice that Flannel is deployed as a pod.

3. Notice that Flannel is deployed as a DaemonSet (kube-flannel-ds), not as a service.
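Instead of reading the full listing, you can filter the DaemonSet names for the CNI plugins used in this tutorial. This is a small sketch under stated assumptions: the function name list_cni_daemonsets is hypothetical, it only knows the two DaemonSet names that appear in this tutorial (kube-flannel-ds and cilium), and the sample input mirrors the DaemonSets shown in the output above.

```shell
# Print the names of the CNI DaemonSets (Flannel or Cilium) found on stdin.
# Expected input: the output of `kubectl -n kube-system get daemonset -o name`.
list_cni_daemonsets() {
  grep -E '^daemonset\.apps/(kube-flannel-ds|cilium)$' | sed 's|^daemonset\.apps/||'
}

# Sample built from the DaemonSets listed above. On a live cluster you would run:
#   kubectl -n kube-system get daemonset -o name | list_cni_daemonsets
sample_daemonsets='daemonset.apps/csi-oci-node
daemonset.apps/kube-flannel-ds
daemonset.apps/kube-proxy
daemonset.apps/node-termination-handler
daemonset.apps/nvidia-gpu-device-plugin
daemonset.apps/proxymux-client'

printf '%s\n' "$sample_daemonsets" | list_cni_daemonsets
```

At this point in the tutorial only kube-flannel-ds is reported.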

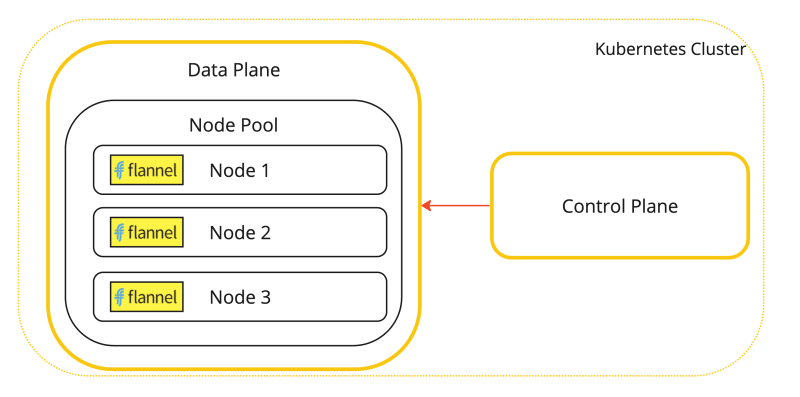

This is a visual representation of the Kubernetes worker nodes with the Flannel CNI installed.

Let's now install Cilium.

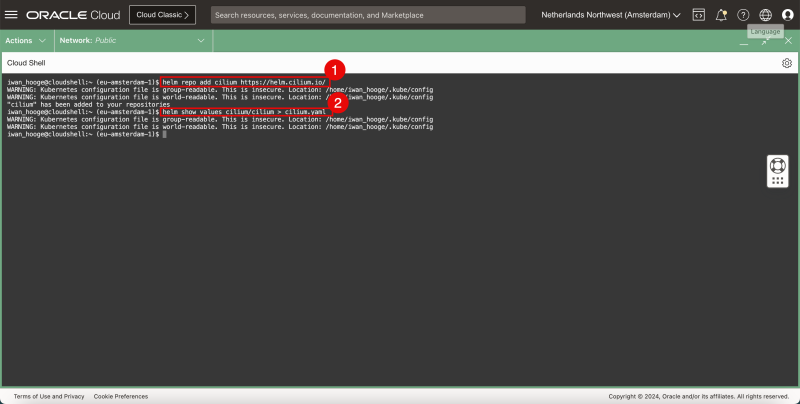

1. Issue the following command to add the cilium repository:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ helm repo add cilium https://helm.cilium.io/

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/iwan_hooge/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/iwan_hooge/.kube/config

"cilium" has been added to your repositories

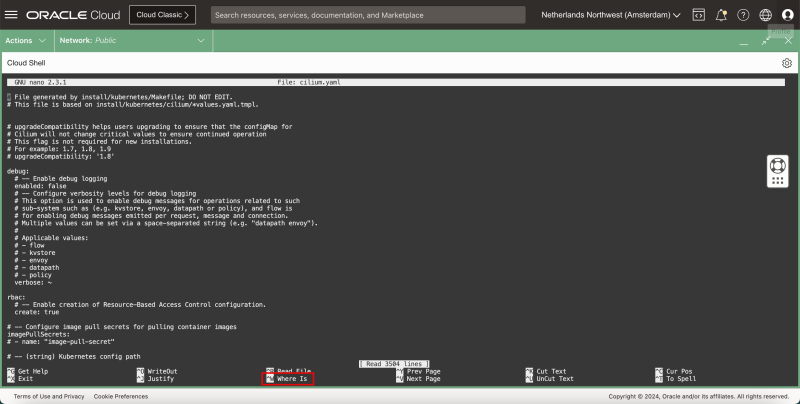

2. Issue the following command to write the default values of the Cilium Helm chart to a YAML file (this file is used later for the deployment):

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ helm show values cilium/cilium > cilium.yaml

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/iwan_hooge/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/iwan_hooge/.kube/config

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$
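The two Helm warnings above are about the file permissions of the kubeconfig file. One common way to address them is to restrict the file to its owner. The sketch below is not part of the original walkthrough: it demonstrates the permission change on a temporary stand-in file (demo_config is a hypothetical name) so that it can be run safely anywhere, and it assumes GNU stat as found on Linux. The commented line shows the equivalent command for the real file.

```shell
# Create a stand-in file that is group- and world-readable, like the kubeconfig above.
demo_config="$(mktemp)"
chmod 644 "$demo_config"

# Restrict the file to its owner (read and write for the owner only).
chmod 600 "$demo_config"

# Show the resulting permission bits in octal.
stat -c '%a' "$demo_config"

# For the real file in the Cloud Shell the equivalent would be:
#   chmod 600 "$HOME/.kube/config"
```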

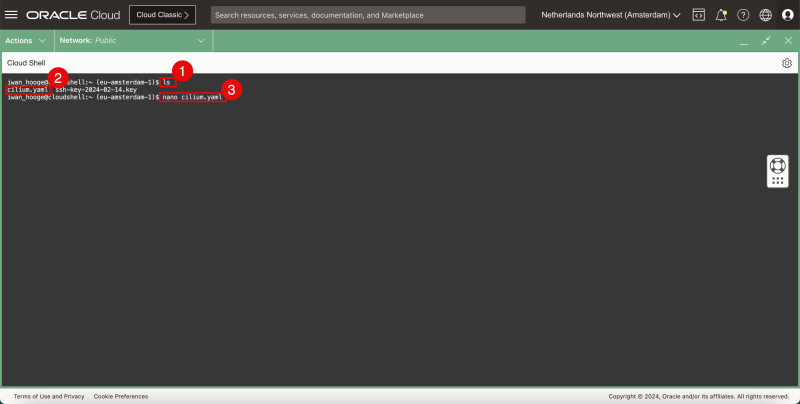

1. Issue this command to confirm if the Cilium YAML file is present:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ls

cilium.yaml ssh-key-2024-02-14.key

2. Confirm that the YAML file is present.

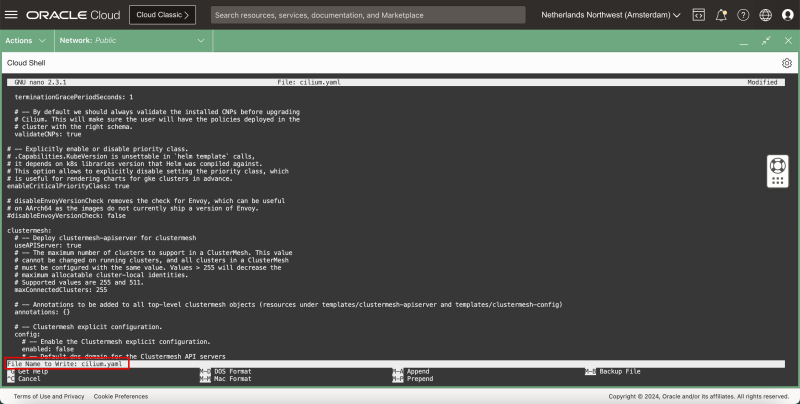

3. Issue this command to edit the YAML file with the nano editor:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ nano cilium.yaml

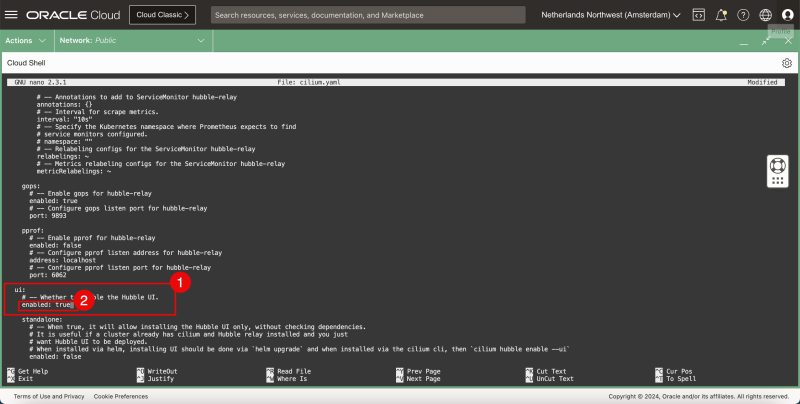

The YAML file is large and contains a lot of settings. Scroll through the file to find the following settings and change them where required:

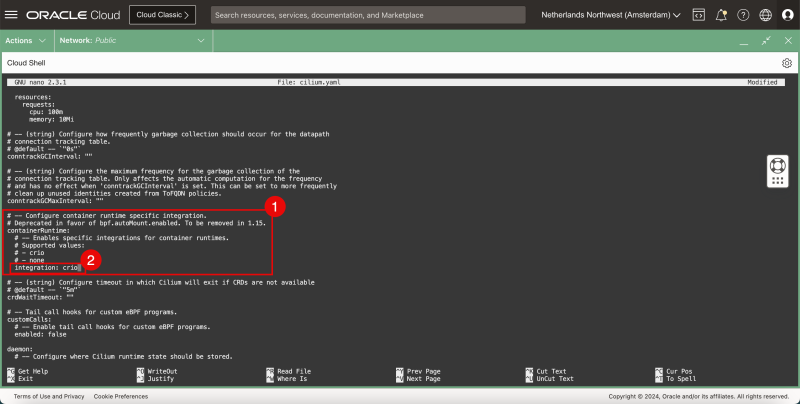

containerRuntime:

integration: crio

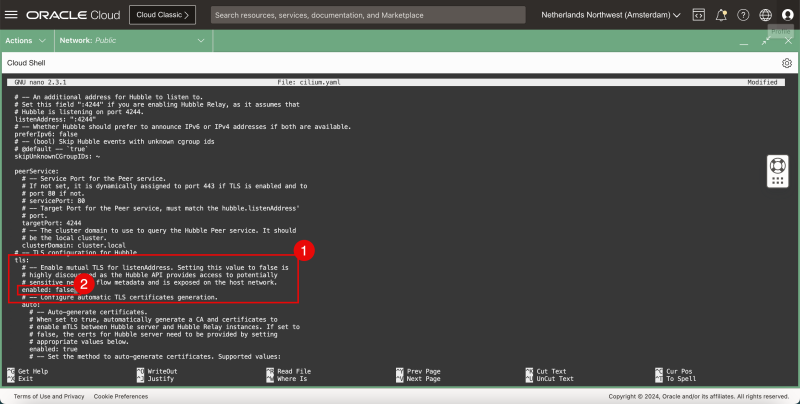

hubble:

tls:

enabled: false

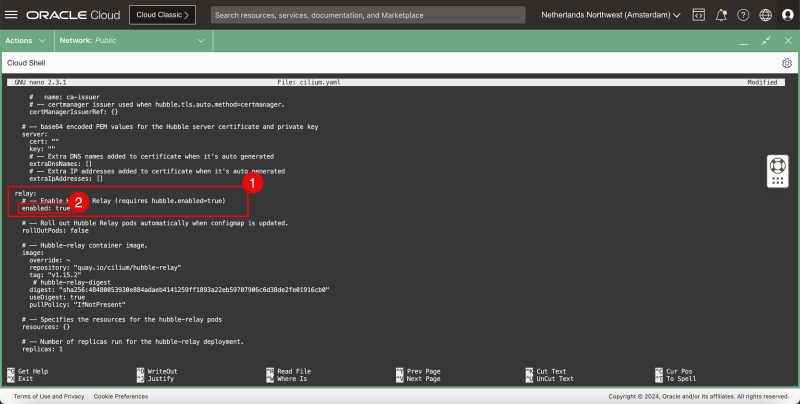

hubble:

relay:

enabled: true

hubble:

ui:

enabled: true

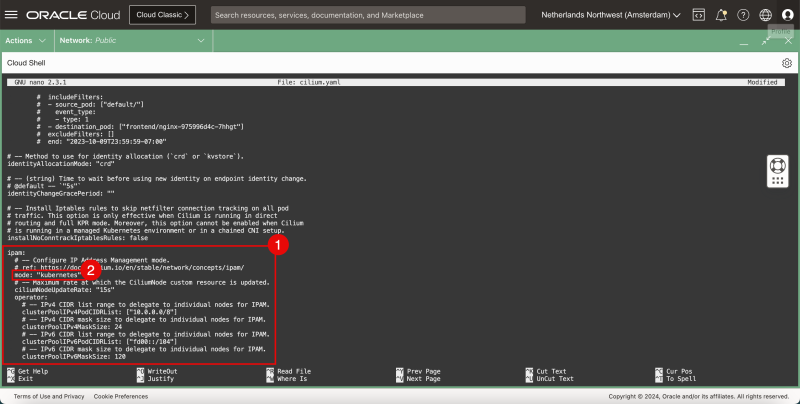

ipam:

mode: "kubernetes"

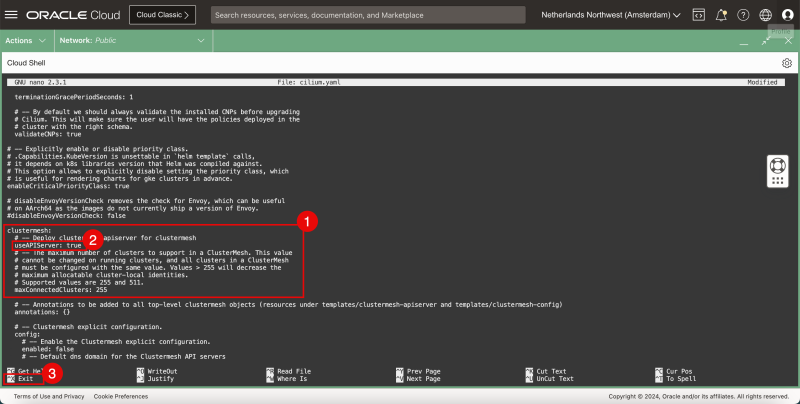

clustermesh:

useAPIServer: true

- Use the CTRL + W keyboard shortcut to find a keyword/setting/section.

- In the containerRuntime section:

- change the integration type.

- In the Hubble > TLS section:

- disable TLS.

- In the Hubble > Relay section:

- enable relay.

- In the Hubble > UI section:

- enable the UI.

- In the IPAM section:

- set the mode to Kubernetes.

- In the Clustermesh section:

- set useAPIServer to true.
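As an alternative to editing the full values file by hand, the same six changes can be kept in a small overrides file, because Helm merges a file passed with -f on top of the chart defaults. This is a sketch under that assumption and not the method used in this tutorial: the file name cilium-overrides.yaml and the shell variables are hypothetical, and the keys and values are the ones listed above.

```shell
# Write only the settings that differ from the chart defaults to a small file.
workdir="$(mktemp -d)"
overrides_file="$workdir/cilium-overrides.yaml"

cat > "$overrides_file" <<'EOF'
containerRuntime:
  integration: crio
hubble:
  tls:
    enabled: false
  relay:
    enabled: true
  ui:
    enabled: true
ipam:
  mode: "kubernetes"
clustermesh:
  useAPIServer: true
EOF

# Show the file so the nesting can be checked before it is used.
cat "$overrides_file"

# The file would then be passed to Helm in place of the edited cilium.yaml:
#   helm install cilium cilium/cilium --namespace=kube-system -f "$overrides_file"
```

A short overrides file also makes it easier to see later which settings were changed from the defaults.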

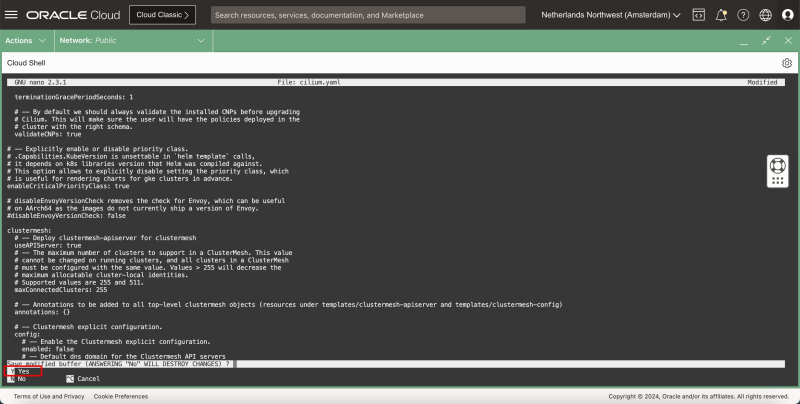

- Use the CTRL + X keyboard shortcut to exit the nano editor.

- Type in Y (Yes) to save the YAML file.

- Leave the YAML file name default (for the save).

- When the file has been saved you return to the terminal.

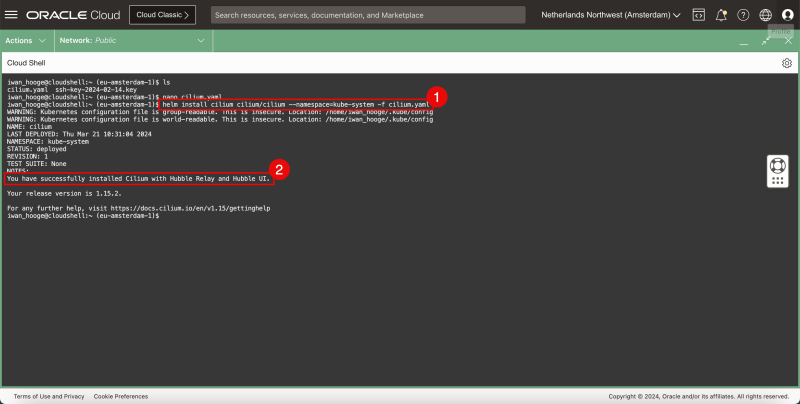

1. Use the following command to install Cilium:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ helm install cilium cilium/cilium --namespace=kube-system -f cilium.yaml

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /home/iwan_hooge/.kube/config

WARNING: Kubernetes configuration file is world-readable. This is insecure. Location: /home/iwan_hooge/.kube/config

NAME: cilium

LAST DEPLOYED: Thu Mar 21 10:31:04 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.15.2.

For any further help, visit https://docs.cilium.io/en/v1.15/gettinghelp

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice the message that Cilium is successfully installed.

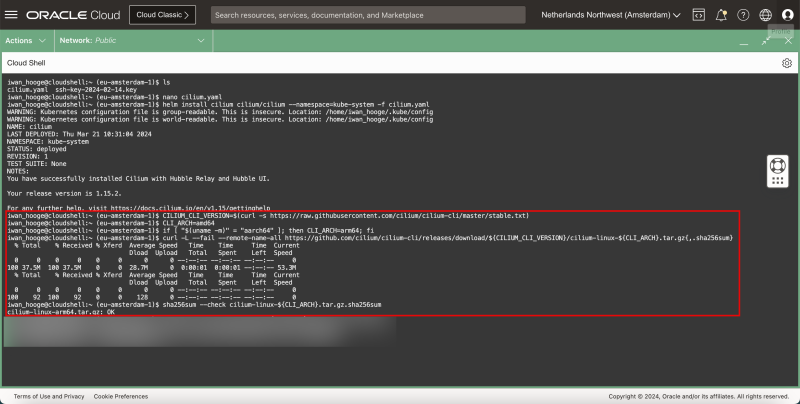

- To execute Cilium commands it is useful to download the Cilium CLI with the following commands:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/master/stable.txt)

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ CLI_ARCH=amd64

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 37.5M 100 37.5M 0 0 28.7M 0 0:00:01 0:00:01 --:--:-- 53.3M

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 92 100 92 0 0 128 0 --:--:-- --:--:-- --:--:-- 0

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum

cilium-linux-arm64.tar.gz: OK
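The checksum step above is what protects you against a corrupted or altered download. The sketch below illustrates how that check behaves, using a local stand-in file instead of the real Cilium archive so that it runs without network access. The names verify_download, demo_dir, and demo.tar.gz are hypothetical, and GNU sha256sum is assumed.

```shell
# Verify a file against its .sha256sum file; returns non-zero on a mismatch.
# $1 = directory that holds both files, $2 = name of the checksum file.
verify_download() {
  ( cd "$1" && sha256sum --check --status "$2" )
}

# Create a stand-in "archive" and a matching checksum file.
demo_dir="$(mktemp -d)"
printf 'placeholder archive\n' > "$demo_dir/demo.tar.gz"
( cd "$demo_dir" && sha256sum demo.tar.gz > demo.tar.gz.sha256sum )

# An untouched file passes the check.
if verify_download "$demo_dir" demo.tar.gz.sha256sum; then
  echo "checksum OK"
fi

# After the file is modified, the same check fails.
printf 'tampered\n' >> "$demo_dir/demo.tar.gz"
if ! verify_download "$demo_dir" demo.tar.gz.sha256sum; then
  echo "checksum mismatch"
fi
```

If the real check reports anything other than OK, download the archive again before extracting it.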

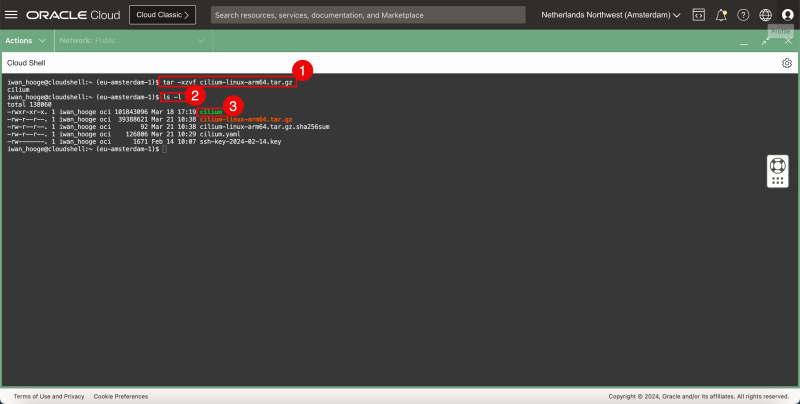

1. Issue the following command to extract the Cilium CLI application:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ sudo tar xzvf cilium-linux-${CLI_ARCH}.tar.gz

2. Issue this command to confirm if the Cilium CLI file is present:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ls -l

total 138060

-rw-r--r--. 1 iwan_hooge oci 101843096 Mar 18 17:19 cilium

-rw-r--r--. 1 iwan_hooge oci 39388621 Mar 21 10:38 cilium-linux-arm64.tar.gz

-rw-r--r--. 1 iwan_hooge oci 92 Mar 21 10:38 cilium-linux-arm64.tar.gz.sha256sum

-rw-r--r--. 1 iwan_hooge oci 126806 Mar 21 10:29 cilium.yaml

-rw-------. 1 iwan_hooge oci 1671 Feb 14 10:07 ssh-key-2024-02-14.key

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

3. After successful extraction you will notice a file that is named “cilium” in the list.

- Issue the following command to remove the downloaded compressed file:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

Now let’s use the Cilium CLI for the first time:

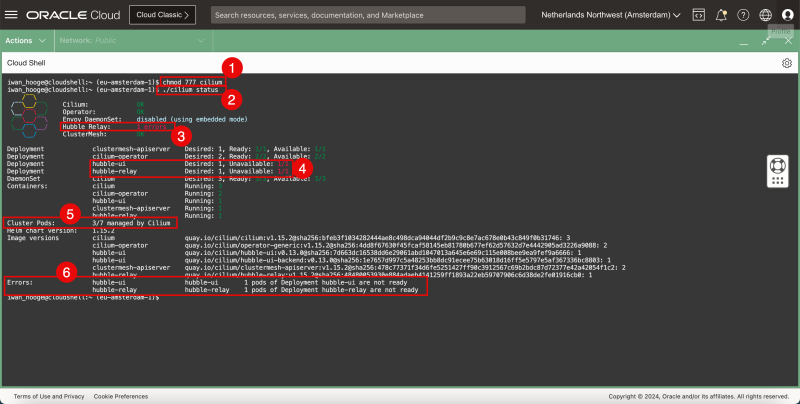

1. Issue the following command to make the Cilium CLI file executable:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ chmod +x cilium

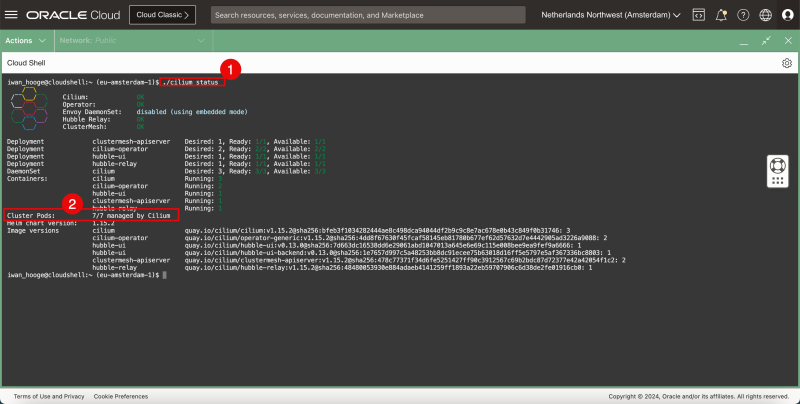

2. Issue the following command to check the status of the Kubernetes Cluster with Cilium:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ./cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: disabled (using embedded mode)

\__/¯¯\__/ Hubble Relay: 1 errors

\__/ ClusterMesh: OK

Deployment clustermesh-apiserver Desired: 1, Ready: 1/1, Available: 1/1

Deployment cilium-operator Desired: 2, Ready: 2/2, Available: 2/2

Deployment hubble-ui Desired: 1, Unavailable: 1/1

Deployment hubble-relay Desired: 1, Unavailable: 1/1

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

Containers: cilium Running: 3

cilium-operator Running: 2

hubble-ui Running: 1

clustermesh-apiserver Running: 1

hubble-relay Running: 1

Cluster Pods: 3/7 managed by Cilium

Helm chart version: 1.15.2

Image versions cilium quay.io/cilium/cilium:v1.15.2@sha256:bfeb3f1034282444ae8c498dca94044df2b9c9c8e7ac678e0b43c849f0b31746: 3

cilium-operator quay.io/cilium/operator-generic:v1.15.2@sha256:4dd8f67630f45fcaf58145eb81780b677ef62d57632d7e4442905ad3226a9088: 2

hubble-ui quay.io/cilium/hubble-ui:v0.13.0@sha256:7d663dc16538dd6e29061abd1047013a645e6e69c115e008bee9ea9fef9a6666: 1

hubble-ui quay.io/cilium/hubble-ui-backend:v0.13.0@sha256:1e7657d997c5a48253bb8dc91ecee75b63018d16ff5e5797e5af367336bc8803: 1

clustermesh-apiserver quay.io/cilium/clustermesh-apiserver:v1.15.2@sha256:478c77371f34d6fe5251427ff90c3912567c69b2bdc87d72377e42a42054f1c2: 2

hubble-relay quay.io/cilium/hubble-relay:v1.15.2@sha256:48480053930e884adaeb4141259ff1893a22eb59707906c6d38de2fe01916cb0: 1

Errors: hubble-ui hubble-ui 1 pods of Deployment hubble-ui are not ready

hubble-relay hubble-relay 1 pods of Deployment hubble-relay are not ready

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

- You will get some errors because some pods started before Cilium was running and are therefore not yet managed by Cilium.

3. This error is related to ClusterMesh.

4. This error is related to Hubble UI and Hubble Relay.

5. Notice that not all Cluster pods are managed by Cilium: only 3/7 pods are. We need to ensure all of them are managed by Cilium.

6. Notice some additional error messages related to the above errors.
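The number of pods that still need attention can be derived from the Cluster Pods line of the status output. The following sketch is an illustration only: the function name unmanaged_pod_count is hypothetical, and it assumes the line has the same wording as in the output above.

```shell
# Read `cilium status` output from stdin and print how many cluster pods
# are not yet managed by Cilium (total minus managed).
unmanaged_pod_count() {
  sed -n 's|.*Cluster Pods:[[:space:]]*\([0-9][0-9]*\)/\([0-9][0-9]*\) managed by Cilium.*|\1 \2|p' |
    awk '{ print $2 - $1 }'
}

# Line copied from the output above. On a live cluster you would run:
#   ./cilium status | unmanaged_pod_count
printf '%s\n' 'Cluster Pods: 3/7 managed by Cilium' | unmanaged_pod_count
```

A result of 0 means every cluster pod is managed by Cilium.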

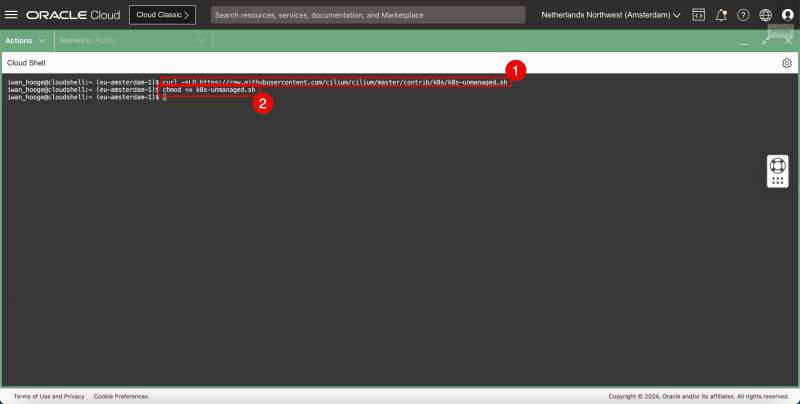

- Let’s check and locate the pods that are currently not managed by Cilium.

- Cilium is very helpful and provides a script to help you identify them.

1. Issue the following command to download the script:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ curl -sLO https://raw.githubusercontent.com/cilium/cilium/master/contrib/k8s/k8s-unmanaged.sh

2. Issue the following command to make this script executable:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ chmod +x k8s-unmanaged.sh

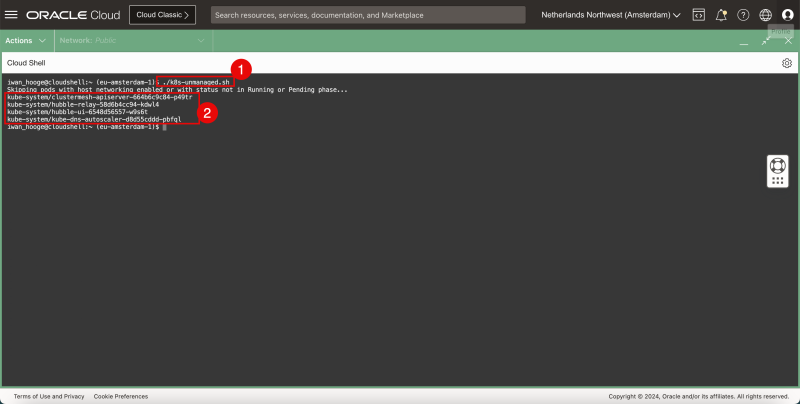

1. Issue the following command to execute this script (to detect pods that need additional attention):

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ./k8s-unmanaged.sh

Skipping pods with host networking enabled or with status not in Running or Pending phase...

kube-system/clustermesh-apiserver-664b6c9c84-p49tr

kube-system/hubble-relay-58d6b4cc94-kdwl4

kube-system/hubble-ui-6548d56557-w9s6t

kube-system/kube-dns-autoscaler-d8d55cddd-pbfql

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Note the pods in the list; these are the pods that are not yet managed by Cilium.

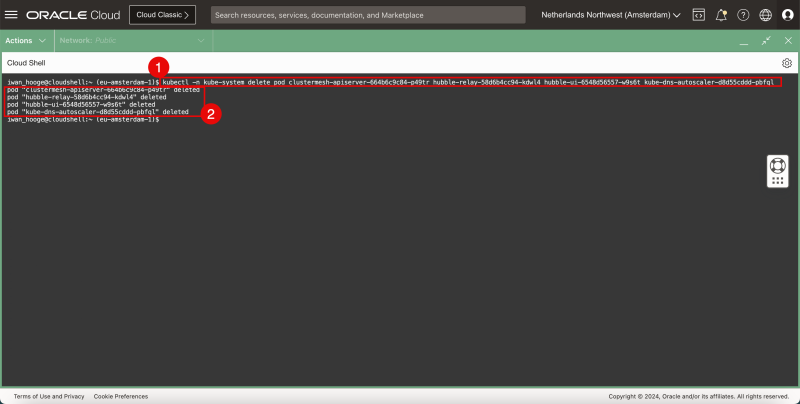

1. Issue the following command to delete the pods that need attention:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl -n kube-system delete pod clustermesh-apiserver-664b6c9c84-p49tr hubble-relay-58d6b4cc94-kdwl4 hubble-ui-6548d56557-w9s6t kube-dns-autoscaler-d8d55cddd-pbfql

pod "clustermesh-apiserver-664b6c9c84-p49tr" deleted

pod "hubble-relay-58d6b4cc94-kdwl4" deleted

pod "hubble-ui-6548d56557-w9s6t" deleted

pod "kube-dns-autoscaler-d8d55cddd-pbfql" deleted

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice the confirmation of the deleted pods:

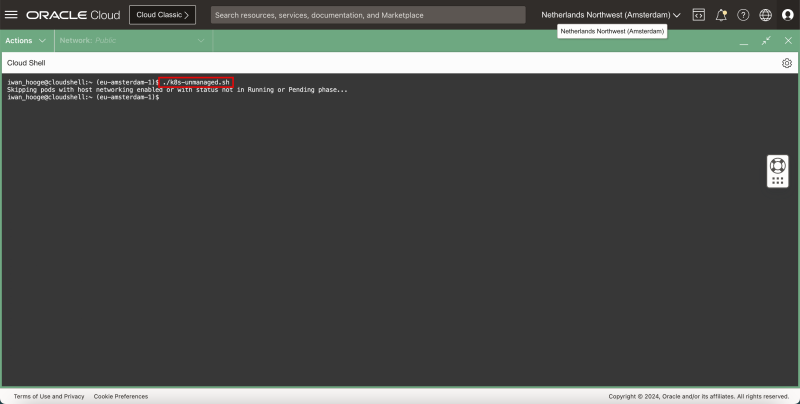

- Issue the following command to execute this script (to detect pods that need additional attention) again:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ./k8s-unmanaged.sh

Skipping pods with host networking enabled or with status not in Running or Pending phase...

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

- Notice that no pods need attention anymore, which means the pods have been recreated with Cilium networking enabled.
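The detect-and-delete sequence above can be sketched as a small filter that turns the script output into delete commands. This is an illustration under stated assumptions, not a replacement for the manual steps: the function name build_delete_commands is hypothetical, the sample text is copied from the script output shown earlier, and the filter only prints the commands so that you can review them before running anything.

```shell
# Turn "namespace/pod" lines into kubectl delete commands (printed, not executed).
# Informational lines, such as the "Skipping pods ..." message, are ignored.
build_delete_commands() {
  grep -E '^[a-z0-9.-]+/[a-z0-9.-]+$' |
    awk -F/ '{ printf "kubectl -n %s delete pod %s\n", $1, $2 }'
}

# Sample copied from the script output above. On a live cluster you would run:
#   ./k8s-unmanaged.sh | build_delete_commands
sample_unmanaged='Skipping pods with host networking enabled or with status not in Running or Pending phase...
kube-system/clustermesh-apiserver-664b6c9c84-p49tr
kube-system/hubble-relay-58d6b4cc94-kdwl4
kube-system/hubble-ui-6548d56557-w9s6t
kube-system/kube-dns-autoscaler-d8d55cddd-pbfql'

printf '%s\n' "$sample_unmanaged" | build_delete_commands
```

Printing the commands first keeps the deletion a deliberate step, which matters in a namespace such as kube-system.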

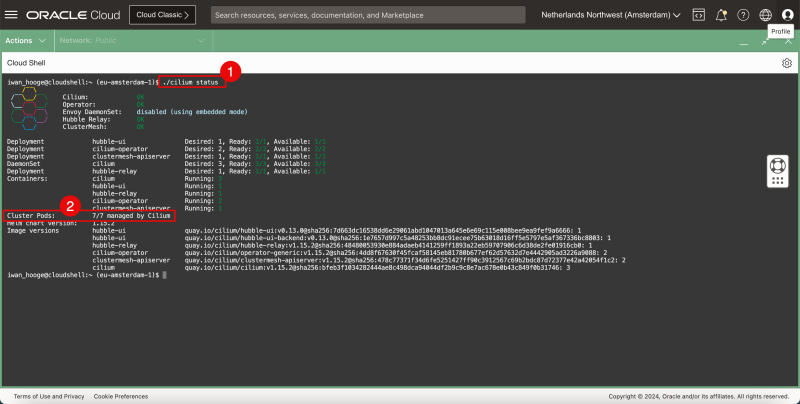

1. Issue the following command to check the status of the Kubernetes Cluster with Cilium:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ./cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: disabled (using embedded mode)

\__/¯¯\__/ Hubble Relay: OK

\__/ ClusterMesh: OK

Deployment hubble-ui Desired: 1, Ready: 1/1, Available: 1/1

Deployment cilium-operator Desired: 2, Ready: 2/2, Available: 2/2

Deployment clustermesh-apiserver Desired: 1, Ready: 1/1, Available: 1/1

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

Deployment hubble-relay Desired: 1, Ready: 1/1, Available: 1/1

Containers: cilium Running: 3

hubble-ui Running: 1

hubble-relay Running: 1

cilium-operator Running: 2

clustermesh-apiserver Running: 1

Cluster Pods: 7/7 managed by Cilium

Helm chart version: 1.15.2

Image versions hubble-ui quay.io/cilium/hubble-ui:v0.13.0@sha256:7d663dc16538dd6e29061abd1047013a645e6e69c115e008bee9ea9fef9a6666: 1

hubble-ui quay.io/cilium/hubble-ui-backend:v0.13.0@sha256:1e7657d997c5a48253bb8dc91ecee75b63018d16ff5e5797e5af367336bc8803: 1

hubble-relay quay.io/cilium/hubble-relay:v1.15.2@sha256:48480053930e884adaeb4141259ff1893a22eb59707906c6d38de2fe01916cb0: 1

cilium-operator quay.io/cilium/operator-generic:v1.15.2@sha256:4dd8f67630f45fcaf58145eb81780b677ef62d57632d7e4442905ad3226a9088: 2

clustermesh-apiserver quay.io/cilium/clustermesh-apiserver:v1.15.2@sha256:478c77371f34d6fe5251427ff90c3912567c69b2bdc87d72377e42a42054f1c2: 2

cilium quay.io/cilium/cilium:v1.15.2@sha256:bfeb3f1034282444ae8c498dca94044df2b9c9c8e7ac678e0b43c849f0b31746: 3

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice that all Cluster pods are managed by Cilium.

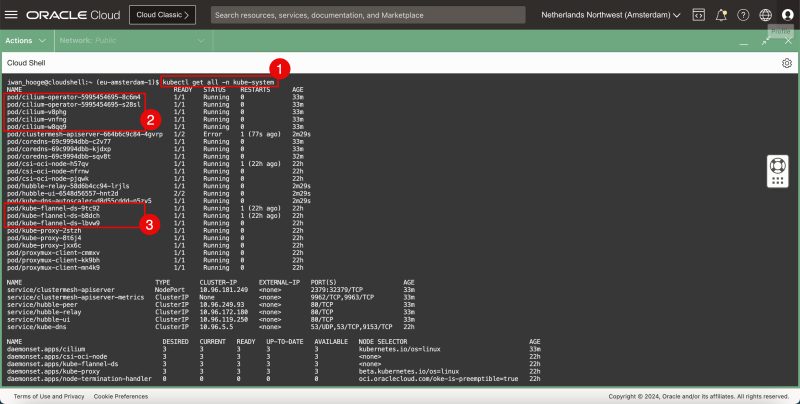

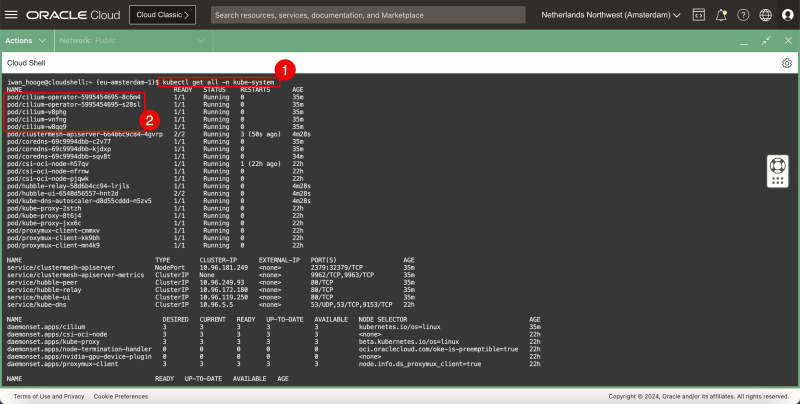

1. Issue the following command to verify the currently deployed CNI plugins (Flannel and Cilium):

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/cilium-operator-5995454695-8c6m4 1/1 Running 0 33m

pod/cilium-operator-5995454695-s28sl 1/1 Running 0 33m

pod/cilium-v8phg 1/1 Running 0 33m

pod/cilium-vnfng 1/1 Running 0 33m

pod/cilium-w8qq9 1/1 Running 0 33m

pod/clustermesh-apiserver-664b6c9c84-4gvrp 1/2 Error 1 (77s ago) 2m29s

pod/coredns-69c9994dbb-c2v77 1/1 Running 0 33m

pod/coredns-69c9994dbb-kjdxp 1/1 Running 0 33m

pod/coredns-69c9994dbb-sqv8t 1/1 Running 0 32m

pod/csi-oci-node-h57qv 1/1 Running 1 (22h ago) 22h

pod/csi-oci-node-nfrnw 1/1 Running 0 22h

pod/csi-oci-node-pjqwk 1/1 Running 0 22h

pod/hubble-relay-58d6b4cc94-lrjls 1/1 Running 0 2m29s

pod/hubble-ui-6548d56557-hnt2d 2/2 Running 0 2m29s

pod/kube-dns-autoscaler-d8d55cddd-n5zv5 1/1 Running 0 2m29s

pod/kube-flannel-ds-9tc92 1/1 Running 1 (22h ago) 22h

pod/kube-flannel-ds-b8dch 1/1 Running 1 (22h ago) 22h

pod/kube-flannel-ds-lbvw9 1/1 Running 0 22h

pod/kube-proxy-2stzh 1/1 Running 0 22h

pod/kube-proxy-8t6j4 1/1 Running 0 22h

pod/kube-proxy-jxx6c 1/1 Running 0 22h

pod/proxymux-client-cmmxv 1/1 Running 0 22h

pod/proxymux-client-kk9bh 1/1 Running 0 22h

pod/proxymux-client-mn4k9 1/1 Running 0 22h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/clustermesh-apiserver NodePort 10.96.181.249 <none> 2379:32379/TCP 33m

service/clustermesh-apiserver-metrics ClusterIP None <none> 9962/TCP,9963/TCP 33m

service/hubble-peer ClusterIP 10.96.249.93 <none> 80/TCP 33m

service/hubble-relay ClusterIP 10.96.172.180 <none> 80/TCP 33m

service/hubble-ui ClusterIP 10.96.119.250 <none> 80/TCP 33m

service/kube-dns ClusterIP 10.96.5.5 <none> 53/UDP,53/TCP,9153/TCP 22h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/cilium 3 3 3 3 3 kubernetes.io/os=linux 33m

daemonset.apps/csi-oci-node 3 3 3 3 3 <none> 22h

daemonset.apps/kube-flannel-ds 3 3 3 3 3 <none> 22h

daemonset.apps/kube-proxy 3 3 3 3 3 beta.kubernetes.io/os=linux 22h

daemonset.apps/node-termination-handler 0 0 0 0 0 oci.oraclecloud.com/oke-is-preemptible=true 22h

daemonset.apps/nvidia-gpu-device-plugin 0 0 0 0 0 <none> 22h

daemonset.apps/proxymux-client 3 3 3 3 3 node.info.ds_proxymux_client=true 22h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cilium-operator 2/2 2 2 33m

deployment.apps/clustermesh-apiserver 0/1 1 0 33m

deployment.apps/coredns 3/3 3 3 22h

deployment.apps/hubble-relay 1/1 1 1 33m

deployment.apps/hubble-ui 1/1 1 1 33m

deployment.apps/kube-dns-autoscaler 1/1 1 1 22h

NAME DESIRED CURRENT READY AGE

replicaset.apps/cilium-operator-5995454695 2 2 2 33m

replicaset.apps/clustermesh-apiserver-664b6c9c84 1 1 0 33m

replicaset.apps/coredns-69c9994dbb 3 3 3 22h

replicaset.apps/hubble-relay-58d6b4cc94 1 1 1 33m

replicaset.apps/hubble-ui-6548d56557 1 1 1 33m

replicaset.apps/kube-dns-autoscaler-d8d55cddd 1 1 1 22h

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice that the Cilium CNI plugin is present.

3. Notice that the Flannel CNI plugin is also still present.
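In the output above, the clustermesh-apiserver deployment is still at 0/1 because its pod was recreated only a few minutes earlier. A quick way to spot such deployments is to compare the two numbers in the READY column. The sketch below is an illustration only: the function name not_ready_deployments is hypothetical, and the sample table is built from the deployment lines in the output above.

```shell
# Print the names of deployments whose READY column (ready/desired) does not match.
# Expected input: the table printed by `kubectl -n kube-system get deployments`.
not_ready_deployments() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Sample built from the output above. On a live cluster you would run:
#   kubectl -n kube-system get deployments | not_ready_deployments
sample_deployments='NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
cilium-operator         2/2     2            2           33m
clustermesh-apiserver   0/1     1            0           33m
coredns                 3/3     3            3           22h
hubble-relay            1/1     1            1           33m
hubble-ui               1/1     1            1           33m
kube-dns-autoscaler     1/1     1            1           22h'

printf '%s\n' "$sample_deployments" | not_ready_deployments
```

An empty result means every deployment in the namespace has reached its desired number of ready pods.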

This is a visual representation of the Kubernetes worker nodes with the Flannel and Cilium CNI installed.

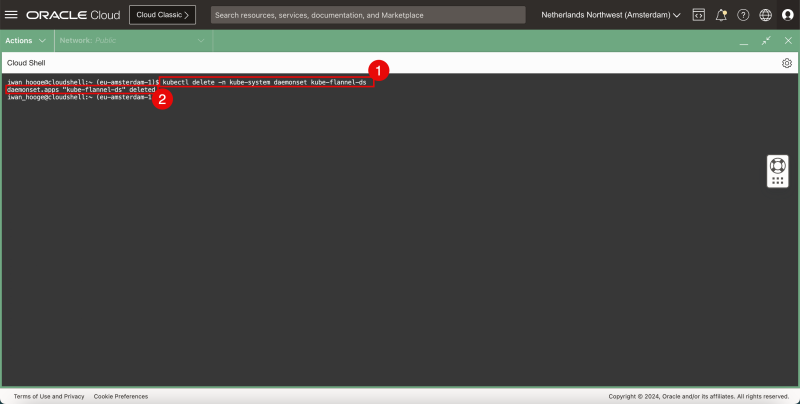

Let's now delete the Flannel plugin.

1. Issue the following command to delete the Flannel CNI.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl delete -n kube-system daemonset kube-flannel-ds

daemonset.apps "kube-flannel-ds" deleted

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice the message that the Flannel CNI has been successfully deleted.

1. Issue the following command to verify the currently deployed CNI plugins (Cilium):

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/cilium-operator-5995454695-8c6m4 1/1 Running 0 35m

pod/cilium-operator-5995454695-s28sl 1/1 Running 0 35m

pod/cilium-v8phg 1/1 Running 0 35m

pod/cilium-vnfng 1/1 Running 0 35m

pod/cilium-w8qq9 1/1 Running 0 35m

pod/clustermesh-apiserver-664b6c9c84-4gvrp 2/2 Running 3 (50s ago) 4m28s

pod/coredns-69c9994dbb-c2v77 1/1 Running 0 35m

pod/coredns-69c9994dbb-kjdxp 1/1 Running 0 35m

pod/coredns-69c9994dbb-sqv8t 1/1 Running 0 34m

pod/csi-oci-node-h57qv 1/1 Running 1 (22h ago) 22h

pod/csi-oci-node-nfrnw 1/1 Running 0 22h

pod/csi-oci-node-pjqwk 1/1 Running 0 22h

pod/hubble-relay-58d6b4cc94-lrjls 1/1 Running 0 4m28s

pod/hubble-ui-6548d56557-hnt2d 2/2 Running 0 4m28s

pod/kube-dns-autoscaler-d8d55cddd-n5zv5 1/1 Running 0 4m28s

pod/kube-proxy-2stzh 1/1 Running 0 22h

pod/kube-proxy-8t6j4 1/1 Running 0 22h

pod/kube-proxy-jxx6c 1/1 Running 0 22h

pod/proxymux-client-cmmxv 1/1 Running 0 22h

pod/proxymux-client-kk9bh 1/1 Running 0 22h

pod/proxymux-client-mn4k9 1/1 Running 0 22h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/clustermesh-apiserver NodePort 10.96.181.249 <none> 2379:32379/TCP 35m

service/clustermesh-apiserver-metrics ClusterIP None <none> 9962/TCP,9963/TCP 35m

service/hubble-peer ClusterIP 10.96.249.93 <none> 80/TCP 35m

service/hubble-relay ClusterIP 10.96.172.180 <none> 80/TCP 35m

service/hubble-ui ClusterIP 10.96.119.250 <none> 80/TCP 35m

service/kube-dns ClusterIP 10.96.5.5 <none> 53/UDP,53/TCP,9153/TCP 22h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/cilium 3 3 3 3 3 kubernetes.io/os=linux 35m

daemonset.apps/csi-oci-node 3 3 3 3 3 <none> 22h

daemonset.apps/kube-proxy 3 3 3 3 3 beta.kubernetes.io/os=linux 22h

daemonset.apps/node-termination-handler 0 0 0 0 0 oci.oraclecloud.com/oke-is-preemptible=true 22h

daemonset.apps/nvidia-gpu-device-plugin 0 0 0 0 0 <none> 22h

daemonset.apps/proxymux-client 3 3 3 3 3 node.info.ds_proxymux_client=true 22h

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cilium-operator 2/2 2 2 35m

deployment.apps/clustermesh-apiserver 1/1 1 1 35m

deployment.apps/coredns 3/3 3 3 22h

deployment.apps/hubble-relay 1/1 1 1 35m

deployment.apps/hubble-ui 1/1 1 1 35m

deployment.apps/kube-dns-autoscaler 1/1 1 1 22h

NAME DESIRED CURRENT READY AGE

replicaset.apps/cilium-operator-5995454695 2 2 2 35m

replicaset.apps/clustermesh-apiserver-664b6c9c84 1 1 1 35m

replicaset.apps/coredns-69c9994dbb 3 3 3 22h

replicaset.apps/hubble-relay-58d6b4cc94 1 1 1 35m

replicaset.apps/hubble-ui-6548d56557 1 1 1 35m

replicaset.apps/kube-dns-autoscaler-d8d55cddd 1 1 1 22h

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

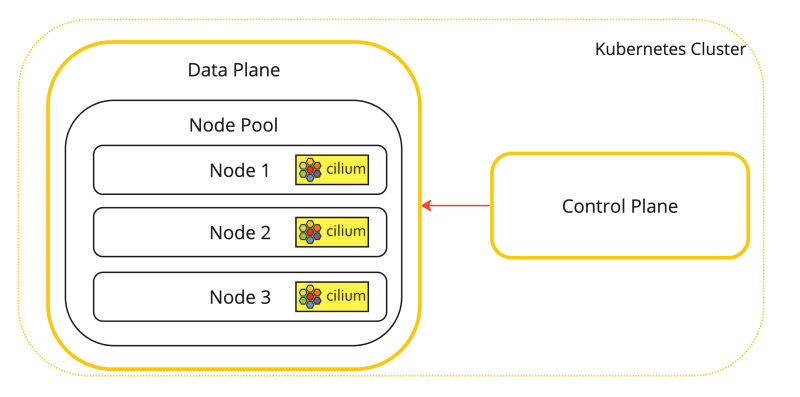

2. Notice that the Cilium CNI plugin is present. Notice that the Flannel CNI plugin was removed.

This is a visual representation of the Kubernetes worker nodes with the Cilium CNI installed.

- An additional command that can be used to verify where Cilium is installed:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl -n kube-system get pods -l k8s-app=cilium

NAME READY STATUS RESTARTS AGE

cilium-v8phg 1/1 Running 0 22h

cilium-vnfng 1/1 Running 0 22h

cilium-w8qq9 1/1 Running 0 22h

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

1. Issue the following command to check the status of the Kubernetes Cluster with Cilium:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ./cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: disabled (using embedded mode)

\__/¯¯\__/ Hubble Relay: OK

\__/ ClusterMesh: OK

Deployment clustermesh-apiserver Desired: 1, Ready: 1/1, Available: 1/1

Deployment cilium-operator Desired: 2, Ready: 2/2, Available: 2/2

Deployment hubble-ui Desired: 1, Ready: 1/1, Available: 1/1

Deployment hubble-relay Desired: 1, Ready: 1/1, Available: 1/1

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

Containers: cilium Running: 3

cilium-operator Running: 2

hubble-ui Running: 1

clustermesh-apiserver Running: 1

hubble-relay Running: 1

Cluster Pods: 7/7 managed by Cilium

Helm chart version: 1.15.2

Image versions cilium quay.io/cilium/cilium:v1.15.2@sha256:bfeb3f1034282444ae8c498dca94044df2b9c9c8e7ac678e0b43c849f0b31746: 3

cilium-operator quay.io/cilium/operator-generic:v1.15.2@sha256:4dd8f67630f45fcaf58145eb81780b677ef62d57632d7e4442905ad3226a9088: 2

hubble-ui quay.io/cilium/hubble-ui:v0.13.0@sha256:7d663dc16538dd6e29061abd1047013a645e6e69c115e008bee9ea9fef9a6666: 1

hubble-ui quay.io/cilium/hubble-ui-backend:v0.13.0@sha256:1e7657d997c5a48253bb8dc91ecee75b63018d16ff5e5797e5af367336bc8803: 1

clustermesh-apiserver quay.io/cilium/clustermesh-apiserver:v1.15.2@sha256:478c77371f34d6fe5251427ff90c3912567c69b2bdc87d72377e42a42054f1c2: 2

hubble-relay quay.io/cilium/hubble-relay:v1.15.2@sha256:48480053930e884adaeb4141259ff1893a22eb59707906c6d38de2fe01916cb0: 1

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice that all Cluster pods are still managed by Cilium.

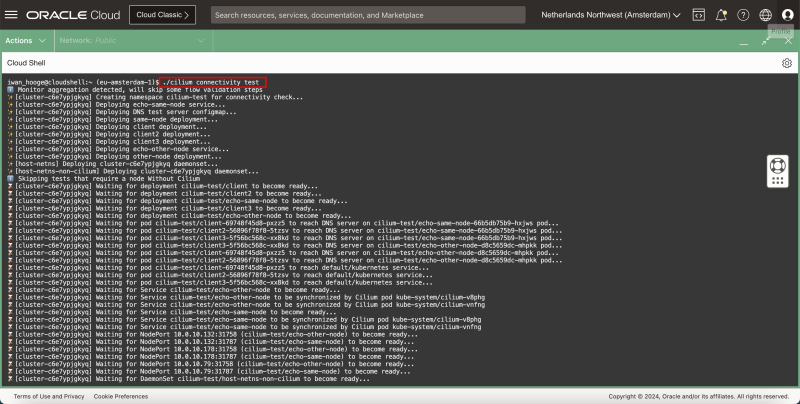

- Cilium has a built-in connectivity test that is capable of testing networking inside the Kubernetes cluster.

- Issue the following command to perform the Cilium connectivity tests:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ./cilium connectivity test

ℹ️ Monitor aggregation detected, will skip some flow validation steps

✨ [cluster-c6e7ypjgkyq] Creating namespace cilium-test for connectivity check...

✨ [cluster-c6e7ypjgkyq] Deploying echo-same-node service...

✨ [cluster-c6e7ypjgkyq] Deploying DNS test server configmap...

✨ [cluster-c6e7ypjgkyq] Deploying same-node deployment...

✨ [cluster-c6e7ypjgkyq] Deploying client deployment...

✨ [cluster-c6e7ypjgkyq] Deploying client2 deployment...

✨ [cluster-c6e7ypjgkyq] Deploying client3 deployment...

✨ [cluster-c6e7ypjgkyq] Deploying echo-other-node service...

✨ [cluster-c6e7ypjgkyq] Deploying other-node deployment...

✨ [host-netns] Deploying cluster-c6e7ypjgkyq daemonset...

✨ [host-netns-non-cilium] Deploying cluster-c6e7ypjgkyq daemonset...

ℹ️ Skipping tests that require a node Without Cilium

⌛ [cluster-c6e7ypjgkyq] Waiting for deployment cilium-test/client to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for deployment cilium-test/client2 to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for deployment cilium-test/echo-same-node to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for deployment cilium-test/client3 to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for deployment cilium-test/echo-other-node to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client-69748f45d8-pxzz5 to reach DNS server on cilium-test/echo-same-node-66b5db75b9-hxjws pod...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client2-56896f78f8-5tzsv to reach DNS server on cilium-test/echo-same-node-66b5db75b9-hxjws pod...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client3-5f56bc568c-xx8kd to reach DNS server on cilium-test/echo-same-node-66b5db75b9-hxjws pod...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client3-5f56bc568c-xx8kd to reach DNS server on cilium-test/echo-other-node-d8c5659dc-mhpkk pod...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client-69748f45d8-pxzz5 to reach DNS server on cilium-test/echo-other-node-d8c5659dc-mhpkk pod...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client2-56896f78f8-5tzsv to reach DNS server on cilium-test/echo-other-node-d8c5659dc-mhpkk pod...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client-69748f45d8-pxzz5 to reach default/kubernetes service...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client2-56896f78f8-5tzsv to reach default/kubernetes service...

⌛ [cluster-c6e7ypjgkyq] Waiting for pod cilium-test/client3-5f56bc568c-xx8kd to reach default/kubernetes service...

⌛ [cluster-c6e7ypjgkyq] Waiting for Service cilium-test/echo-other-node to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for Service cilium-test/echo-other-node to be synchronized by Cilium pod kube-system/cilium-v8phg

⌛ [cluster-c6e7ypjgkyq] Waiting for Service cilium-test/echo-other-node to be synchronized by Cilium pod kube-system/cilium-vnfng

⌛ [cluster-c6e7ypjgkyq] Waiting for Service cilium-test/echo-same-node to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for Service cilium-test/echo-same-node to be synchronized by Cilium pod kube-system/cilium-v8phg

⌛ [cluster-c6e7ypjgkyq] Waiting for Service cilium-test/echo-same-node to be synchronized by Cilium pod kube-system/cilium-vnfng

⌛ [cluster-c6e7ypjgkyq] Waiting for NodePort 10.0.10.132:31758 (cilium-test/echo-other-node) to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for NodePort 10.0.10.132:31787 (cilium-test/echo-same-node) to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for NodePort 10.0.10.178:31758 (cilium-test/echo-other-node) to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for NodePort 10.0.10.178:31787 (cilium-test/echo-same-node) to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for NodePort 10.0.10.79:31758 (cilium-test/echo-other-node) to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for NodePort 10.0.10.79:31787 (cilium-test/echo-same-node) to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for DaemonSet cilium-test/host-netns-non-cilium to become ready...

⌛ [cluster-c6e7ypjgkyq] Waiting for DaemonSet cilium-test/host-netns to become ready...

ℹ️ Skipping IPCache check

🔭 Enabling Hubble telescope...

⚠️ Unable to contact Hubble Relay, disabling Hubble telescope and flow validation: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing: dial tcp 127.0.0.1:4245: connect: connection refused"

ℹ️ Expose Relay locally with:

cilium hubble enable

cilium hubble port-forward&

ℹ️ Cilium version: 1.15.2

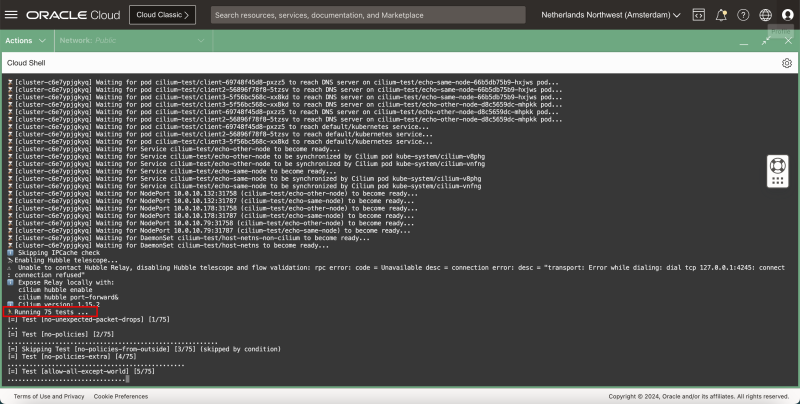

🏃 Running 75 tests ...

[=] Test [no-unexpected-packet-drops] [1/75]

...

[=] Test [no-policies] [2/75]

.........................................................

[=] Skipping Test [no-policies-from-outside] [3/75] (skipped by condition)

[=] Test [no-policies-extra] [4/75]

................................................

[=] Test [allow-all-except-world] [5/75]

....................................

[=] Test [client-ingress] [6/75]

......

[=] Test [client-ingress-knp] [7/75]

......

[=] Test [allow-all-with-metrics-check] [8/75]

......

[=] Test [all-ingress-deny] [9/75]

............

[=] Skipping Test [all-ingress-deny-from-outside] [10/75] (skipped by condition)

[=] Test [all-ingress-deny-knp] [11/75]

............

[=] Test [all-egress-deny] [12/75]

........................

[=] Test [all-egress-deny-knp] [13/75]

........................

[=] Test [all-entities-deny] [14/75]

............

[=] Test [cluster-entity] [15/75]

...

[=] Skipping Test [cluster-entity-multi-cluster] [16/75] (skipped by condition)

[=] Test [host-entity-egress] [17/75]

..................

[=] Test [host-entity-ingress] [18/75]

......

[=] Test [echo-ingress] [19/75]

......

[=] Skipping Test [echo-ingress-from-outside] [20/75] (skipped by condition)

[=] Test [echo-ingress-knp] [21/75]

......

[=] Test [client-ingress-icmp] [22/75]

......

[=] Test [client-egress] [23/75]

......

[=] Test [client-egress-knp] [24/75]

......

[=] Test [client-egress-expression] [25/75]

......

[=] Test [client-egress-expression-knp] [26/75]

......

[=] Test [client-with-service-account-egress-to-echo] [27/75]

......

[=] Test [client-egress-to-echo-service-account] [28/75]

......

[=] Test [to-entities-world] [29/75]

.........

[=] Test [to-cidr-external] [30/75]

......

[=] Test [to-cidr-external-knp] [31/75]

......

[=] Skipping Test [from-cidr-host-netns] [32/75] (skipped by condition)

[=] Test [echo-ingress-from-other-client-deny] [33/75]

..........

[=] Test [client-ingress-from-other-client-icmp-deny] [34/75]

............

[=] Test [client-egress-to-echo-deny] [35/75]

............

[=] Test [client-ingress-to-echo-named-port-deny] [36/75]

....

[=] Test [client-egress-to-echo-expression-deny] [37/75]

....

[=] Test [client-with-service-account-egress-to-echo-deny] [38/75]

....

[=] Test [client-egress-to-echo-service-account-deny] [39/75]

..

[=] Test [client-egress-to-cidr-deny] [40/75]

......

[=] Test [client-egress-to-cidr-deny-default] [41/75]

......

[=] Test [health] [42/75]

...

[=] Skipping Test [north-south-loadbalancing] [43/75] (Feature node-without-cilium is disabled)

[=] Test [pod-to-pod-encryption] [44/75]

.

[=] Test [node-to-node-encryption] [45/75]

...

[=] Skipping Test [egress-gateway] [46/75] (skipped by condition)

[=] Skipping Test [egress-gateway-excluded-cidrs] [47/75] (Feature enable-ipv4-egress-gateway is disabled)

[=] Skipping Test [pod-to-node-cidrpolicy] [48/75] (Feature cidr-match-nodes is disabled)

[=] Skipping Test [north-south-loadbalancing-with-l7-policy] [49/75] (Feature node-without-cilium is disabled)

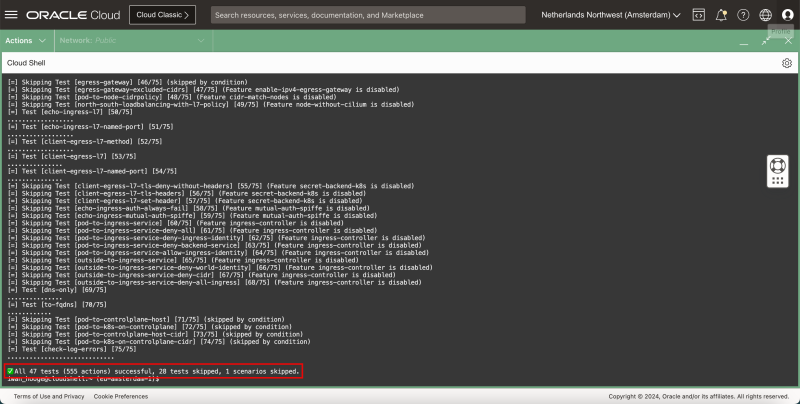

[=] Test [echo-ingress-l7] [50/75]

..................

[=] Test [echo-ingress-l7-named-port] [51/75]

..................

[=] Test [client-egress-l7-method] [52/75]

..................

[=] Test [client-egress-l7] [53/75]

...............

[=] Test [client-egress-l7-named-port] [54/75]

...............

[=] Skipping Test [client-egress-l7-tls-deny-without-headers] [55/75] (Feature secret-backend-k8s is disabled)

[=] Skipping Test [client-egress-l7-tls-headers] [56/75] (Feature secret-backend-k8s is disabled)

[=] Skipping Test [client-egress-l7-set-header] [57/75] (Feature secret-backend-k8s is disabled)

[=] Skipping Test [echo-ingress-auth-always-fail] [58/75] (Feature mutual-auth-spiffe is disabled)

[=] Skipping Test [echo-ingress-mutual-auth-spiffe] [59/75] (Feature mutual-auth-spiffe is disabled)

[=] Skipping Test [pod-to-ingress-service] [60/75] (Feature ingress-controller is disabled)

[=] Skipping Test [pod-to-ingress-service-deny-all] [61/75] (Feature ingress-controller is disabled)

[=] Skipping Test [pod-to-ingress-service-deny-ingress-identity] [62/75] (Feature ingress-controller is disabled)

[=] Skipping Test [pod-to-ingress-service-deny-backend-service] [63/75] (Feature ingress-controller is disabled)

[=] Skipping Test [pod-to-ingress-service-allow-ingress-identity] [64/75] (Feature ingress-controller is disabled)

[=] Skipping Test [outside-to-ingress-service] [65/75] (Feature ingress-controller is disabled)

[=] Skipping Test [outside-to-ingress-service-deny-world-identity] [66/75] (Feature ingress-controller is disabled)

[=] Skipping Test [outside-to-ingress-service-deny-cidr] [67/75] (Feature ingress-controller is disabled)

[=] Skipping Test [outside-to-ingress-service-deny-all-ingress] [68/75] (Feature ingress-controller is disabled)

[=] Test [dns-only] [69/75]

...............

[=] Test [to-fqdns] [70/75]

............

[=] Skipping Test [pod-to-controlplane-host] [71/75] (skipped by condition)

[=] Skipping Test [pod-to-k8s-on-controlplane] [72/75] (skipped by condition)

[=] Skipping Test [pod-to-controlplane-host-cidr] [73/75] (skipped by condition)

[=] Skipping Test [pod-to-k8s-on-controlplane-cidr] [74/75] (skipped by condition)

[=] Test [check-log-errors] [75/75]

.............................

✅ All 47 tests (555 actions) successful, 28 tests skipped, 1 scenarios skipped.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

- Notice that the test suite selected 75 tests in my case.

- When the tests are finished, a summary is displayed. Here, 47 tests succeeded and 28 were skipped.
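To keep a record of a run, the test output can be saved to a file and the summary line pulled out afterwards. The following is a minimal sketch, assuming a bash-compatible shell. The function name `connectivity_summary` and the file name `connectivity.log` are hypothetical and not part of the Cilium CLI, and the pattern only matches a success summary of the shape shown above.

```shell
# Hypothetical helper: print the last success-summary line found in a saved
# log of `cilium connectivity test`. Prints nothing if no such line exists.
connectivity_summary() {
  # $1: path to the saved log file
  grep -E '[0-9]+ tests \([0-9]+ actions\) successful' "$1" | tail -n 1
}

# Example usage (needs access to the cluster, so it is left commented out):
#   cilium connectivity test | tee connectivity.log
#   connectivity_summary connectivity.log
```

If the function prints nothing, the log did not contain a success summary and is worth reading in full.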

STEP 03 - Deploy a sample application

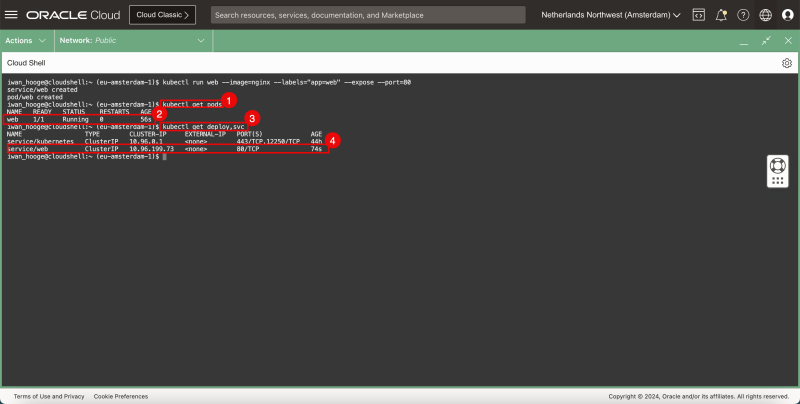

1. Issue the following command to deploy a sample web application and service:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl run web --image=nginx --labels="app=web" --expose --port=80

service/web created

pod/web created

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice that the application and service have been created successfully.

1. Issue the following command to verify the deployed application:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get pods

NAME READY STATUS RESTARTS AGE

web 1/1 Running 0 56s

2. Notice that the web application is running.

3. Issue the following command to verify the deployed service:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get deploy,svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP,12250/TCP 44h

service/web ClusterIP 10.96.199.73 <none> 80/TCP 74s

4. Notice that the web service is running.

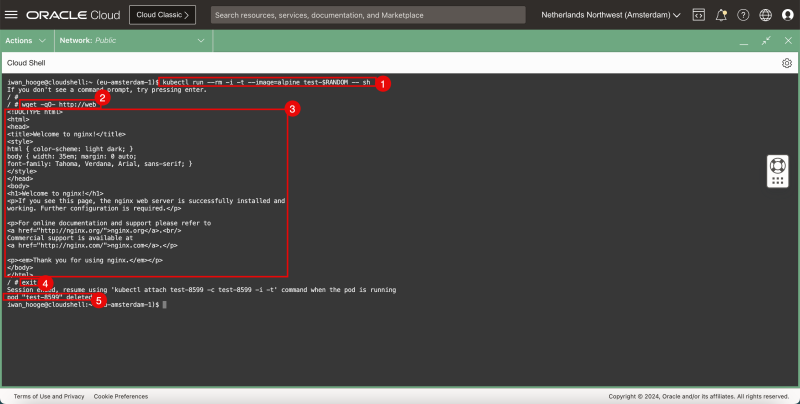

- There are multiple ways to test connectivity to the application. One way is to open a browser and check whether the webpage loads. When no browser is available, a quick alternative is to deploy a temporary pod and test from there.

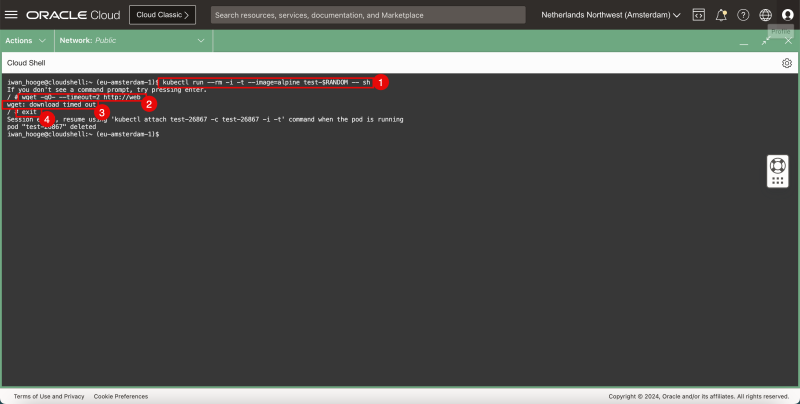

1. Issue the following command to deploy a sample pod to test the web application connectivity:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl run --rm -i -t --image=alpine test-$RANDOM -- sh

If you don't see a command prompt, try pressing enter.

/ #

2. Issue the following command to test connectivity to the web server using wget:

/ # wget -qO- http://web

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

3. Notice the HTML code that the web server returns, confirming the webserver and connectivity is working.

4. Issue this command to exit the temporary pod:

/ # exit

Session ended, resume using 'kubectl attach test-8599 -c test-8599 -i -t' command when the pod is running

pod "test-8599" deleted

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

5. Notice that the pod is deleted immediately after I exit the CLI.
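The interactive check above can also be run as a single command, which is handy when the test has to be repeated. This is a minimal sketch, assuming a bash-compatible shell and the `web` service created earlier. The function name `check_web` is hypothetical. `--restart=Never` makes kubectl run the command once in a plain pod, and `--rm` removes that pod afterwards.

```shell
# Hypothetical helper: fetch a URL from a throwaway alpine pod and print the
# response. The pod is removed again when the command finishes.
check_web() {
  # $1: URL to fetch; defaults to the in-cluster service name used above
  url="${1:-http://web}"
  # $RANDOM mirrors the naming used in the tutorial; $$ is a fallback for
  # shells that do not provide $RANDOM.
  kubectl run "test-${RANDOM:-$$}" --rm -i --restart=Never --image=alpine -- \
    wget -qO- --timeout=2 "$url"
}

# Example usage (needs access to the cluster, so it is left commented out):
#   check_web
#   check_web http://web
```

While the service is reachable, the call should print the nginx welcome page. Once a policy blocks ingress to the `web` pod, the same call should instead report a timeout.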

STEP 04 - Configure Kubernetes Services of Type NetworkPolicy

One of the network security features that the Cilium CNI offers is enforcement of Kubernetes NetworkPolicy resources. A NetworkPolicy controls connectivity between pods, for example by denying traffic to a selected set of pods.

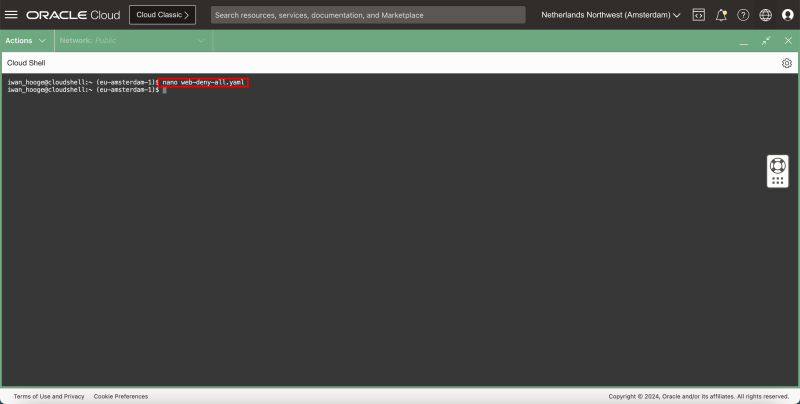

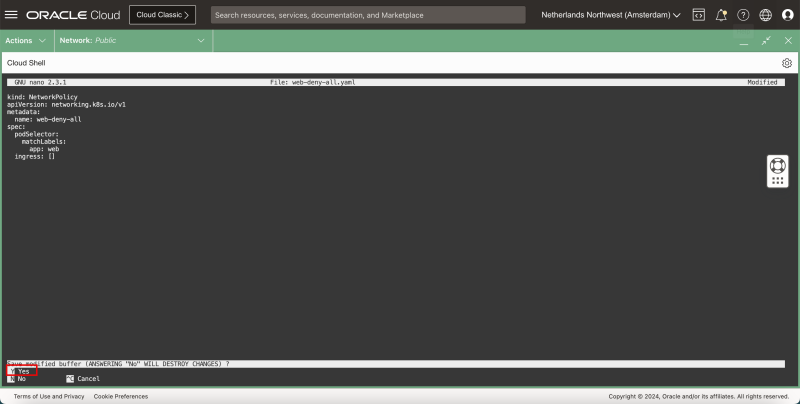

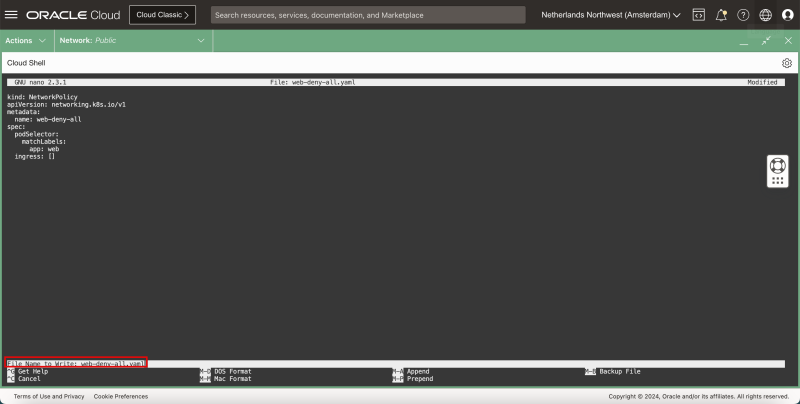

- Issue the following command to open the nano editor to create a new YAML file to implement a NetworkPolicy to deny all network traffic:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ nano web-deny-all.yaml

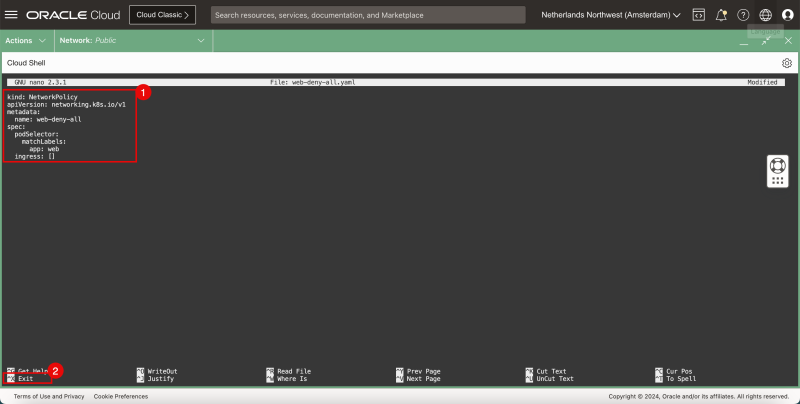

1. Paste the following content in the YAML file:

kind: NetworkPolicy

apiVersion: networking.k8s.io/v1

metadata:

  name: web-deny-all

spec:

  podSelector:

    matchLabels:

      app: web

  ingress: []

2. Use the CTRL + X keyboard shortcut to exit the nano editor.

- Type in Y (Yes) to save the YAML file.

- Leave the YAML file name default (for the save).
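As an alternative to the nano editor, the same file can be written directly from the shell with a here-document, which also keeps the YAML indentation intact. This is a minimal sketch using the file name from this tutorial. The policy selects pods labeled `app: web`, and the empty `ingress` list means no incoming traffic to those pods is allowed.

```shell
# Write the deny-all policy to web-deny-all.yaml in the current directory.
# The quoted 'EOF' prevents the shell from expanding anything in the body.
cat > web-deny-all.yaml <<'EOF'
kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: web-deny-all
spec:
  podSelector:
    matchLabels:
      app: web
  ingress: []
EOF
```

The resulting file can then be applied with `kubectl apply -f web-deny-all.yaml`, exactly as if it had been created in the editor.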

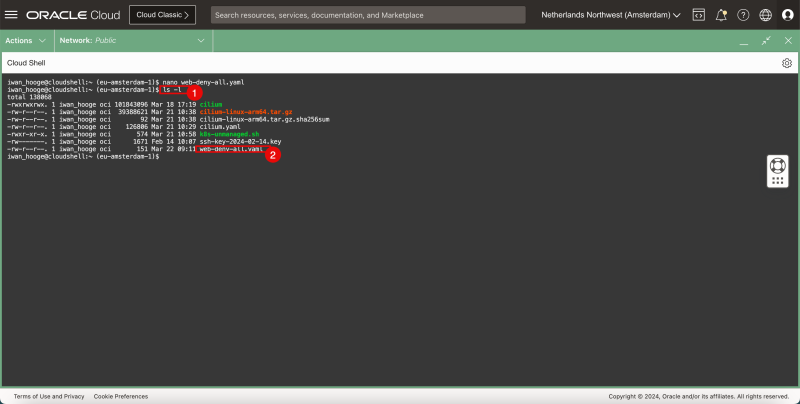

1. Issue the following command to verify if the new YAML file is created:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ ls -l

total 138068

-rwxrwxrwx. 1 iwan_hooge oci 101843096 Mar 18 17:19 cilium

-rw-r--r--. 1 iwan_hooge oci 39388621 Mar 21 10:38 cilium-linux-arm64.tar.gz

-rw-r--r--. 1 iwan_hooge oci 92 Mar 21 10:38 cilium-linux-arm64.tar.gz.sha256sum

-rw-r--r--. 1 iwan_hooge oci 126806 Mar 21 10:29 cilium.yaml

-rwxr-xr-x. 1 iwan_hooge oci 574 Mar 21 10:58 k8s-unmanaged.sh

-rw-------. 1 iwan_hooge oci 1671 Feb 14 10:07 ssh-key-2024-02-14.key

-rw-r--r--. 1 iwan_hooge oci 151 Mar 22 09:11 web-deny-all.yaml

2. Notice that the “web-deny-all.yaml” file is present.

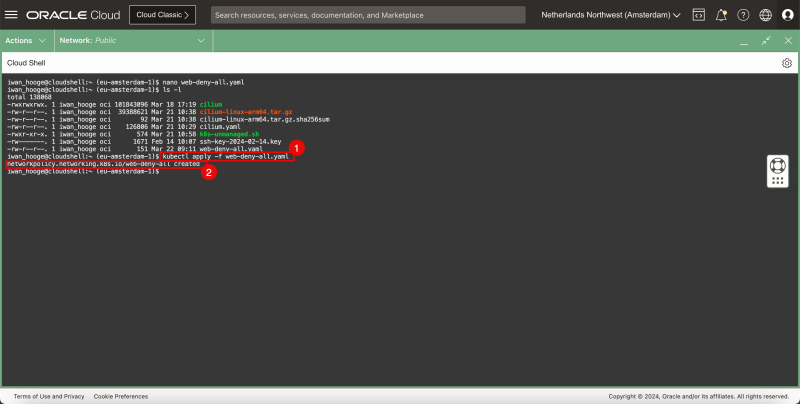

1. Issue the following command to implement the security policy.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl apply -f web-deny-all.yaml

networkpolicy.networking.k8s.io/web-deny-all created

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

2. Notice that the security policy is created.

- Deploy a sample pod to test the web application connectivity again (after the NetworkPolicy has been deployed).

- Issue the following command to deploy a sample pod to test the web application connectivity:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl run --rm -i -t --image=alpine test-$RANDOM -- sh

If you don't see a command prompt, try pressing enter.

1. Issue the following command to test connectivity to the web server using wget:

/ # wget -qO- --timeout=2 http://web

wget: download timed out

2. Notice that the request is timing out.

3. Issue this command to exit the temporary pod:

/ # exit

Session ended, resume using 'kubectl attach test-26867 -c test-26867 -i -t' command when the pod is running

pod "test-26867" deleted

- Now let's remove the security policy, and perform the tests again.

1. Issue the following command to remove (delete) the security policy:

2. Notice that the security policy is removed.

3. Issue the following command to deploy a sample pod to test the web application connectivity:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl run --rm -i -t --image=alpine test-$RANDOM -- sh

If you don't see a command prompt, try pressing enter.

4. Issue the following command to test connectivity to the web server using wget:

5. Notice the HTML code that the web server returns, confirming the webserver and connectivity is working.

6. Issue this command to exit the temporary pod:

/ # exit

Session ended, resume using 'kubectl attach test-26248 -c test-26248 -i -t' command when the pod is running

pod "test-26248" deleted

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

7. Notice that the pod is deleted immediately after I exit the CLI.
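The command that removes the policy is not reproduced in the text of this step. One way that should work is to delete the policy through the same manifest that created it. This is a sketch under that assumption, not necessarily the command used in the original run, and the function name `remove_web_policy` is hypothetical.

```shell
# Sketch only: both calls are standard kubectl subcommands, but the original
# walkthrough does not show which command was actually used.
remove_web_policy() {
  # Delete the policy by pointing at the manifest that created it.
  kubectl delete -f web-deny-all.yaml
  # List the remaining policies in the current namespace to confirm.
  kubectl get networkpolicy
}

# Example usage (needs access to the cluster, so it is left commented out):
#   remove_web_policy
```

Deleting by name with `kubectl delete networkpolicy web-deny-all` should be equivalent, since `web-deny-all` is the name set in the manifest's metadata.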

STEP 05 - Removing the sample application

- To clean up the deployed web service, issue the following command:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl delete service web --namespace default

service "web" deleted

- To clean up the deployed web application (the pod), issue the following command:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl delete pods -l app=web --namespace default

pod "web" deleted

STEP 06 - Deploy a sample application and configure Kubernetes Services of Type LoadBalancer and clean up again

Now that we have tested the NetworkPolicy service (using Cilium), let's also test out the LoadBalancer Service (using Cilium).

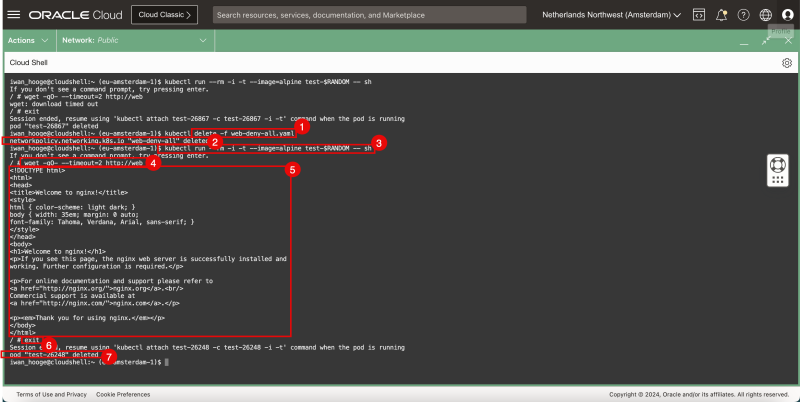

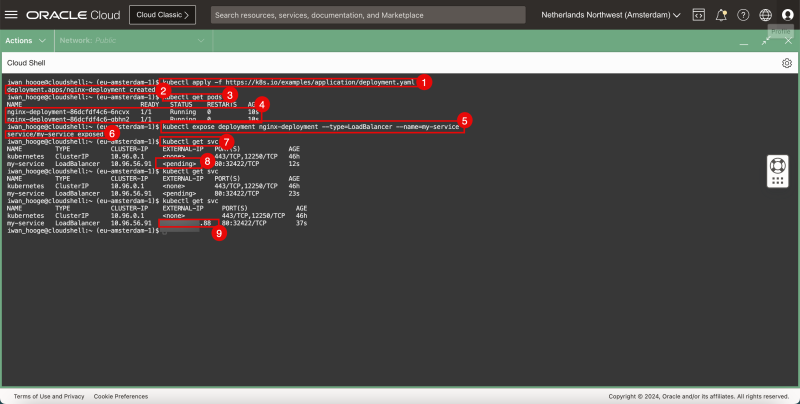

1. Issue the following command to deploy a new Application.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl apply -f https://k8s.io/examples/application/deployment.yaml

deployment.apps/nginx-deployment created

2. Verify that the application is successfully created.

3. Issue the following command to get the pods that are deployed.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-86dcfdf4c6-6ncvx 1/1 Running 0 10s

nginx-deployment-86dcfdf4c6-qbhn2 1/1 Running 0 10s

4. Review the deployed pods.

5. Issue the following command to expose the application through a LoadBalancer Service.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl expose deployment nginx-deployment --type=LoadBalancer --name=my-service

service/my-service exposed

6. Verify that the service is successfully created and the application is successfully exposed.

7. Issue the following command to review the exposed services and the IP addresses.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP,12250/TCP 46h

my-service LoadBalancer 10.96.56.91 <pending> 80:32422/TCP 12s

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP,12250/TCP 46h

my-service LoadBalancer 10.96.56.91 <pending> 80:32422/TCP 23s

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP,12250/TCP 46h

my-service LoadBalancer 10.96.56.91 XXX.XXX.XXX.88 80:32422/TCP 37s

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

8. Notice that while the service is created the external IP is pending.

9. Eventually after issuing the same command a few times a public IP address will be configured (ending with .88).
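Instead of repeating `kubectl get svc` by hand, the wait for the external IP can be scripted. This is a minimal sketch, assuming a bash-compatible shell and the `my-service` name used above. The function name `wait_for_lb_ip` and the `LB_POLL_SECONDS` variable are hypothetical. The JSONPath expression reads the first ingress IP from the service status, which stays empty while the address is pending.

```shell
# Hypothetical helper: poll a LoadBalancer service until it reports an
# external IP, then print that IP. Returns 1 if no IP shows up in time.
wait_for_lb_ip() {
  svc="${1:-my-service}"   # service name; defaults to the one created above
  tries="${2:-30}"         # maximum number of polls
  i=0
  while [ "$i" -lt "$tries" ]; do
    ip="$(kubectl get svc "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    i=$((i + 1))
    # Pause between polls; 5 seconds is an arbitrary default.
    sleep "${LB_POLL_SECONDS:-5}"
  done
  return 1
}

# Example usage (needs access to the cluster, so it is left commented out):
#   wait_for_lb_ip my-service
```

The printed address is the one to open in the browser in the next step.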

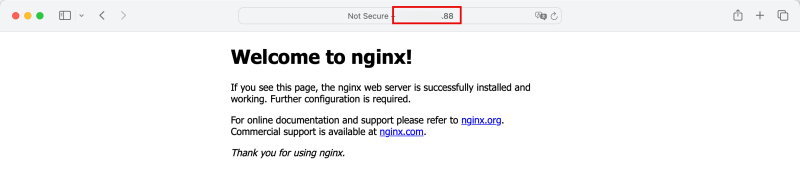

- Copy and paste the public IP address (ending with .88) into an internet browser and verify that the deployed application (NGINX web server with the default website) is reachable through the LoadBalancer Service public IP address.

- A summary of the commands used can be found below:

kubectl get pods

kubectl apply -f https://k8s.io/examples/application/deployment.yaml

kubectl get pods

kubectl get svc

kubectl expose deployment nginx-deployment --type=LoadBalancer --name=my-service

kubectl get svc

- Now that the application and the LoadBalancer Service have been deployed and tested successfully, it is good practice to clean them up.

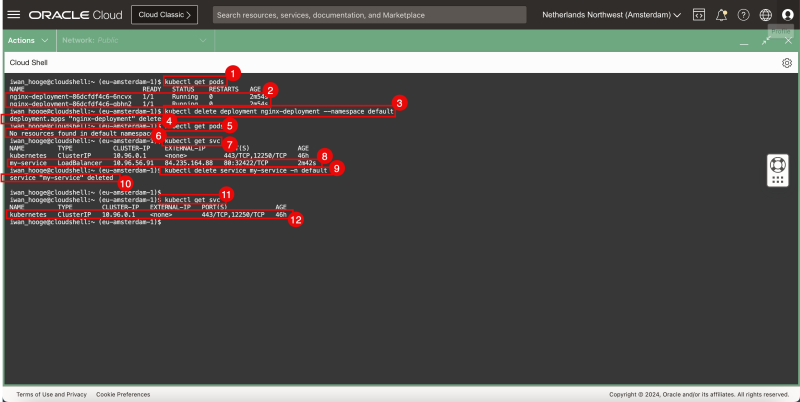

1. Issue the following command to get the pods that are deployed.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-86dcfdf4c6-6ncvx 1/1 Running 0 2m54s

nginx-deployment-86dcfdf4c6-qbhn2 1/1 Running 0 2m54s

2. Review the deployed pods.

3. To clean up the deployed web application issue the following command:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl delete deployment nginx-deployment --namespace default

deployment.apps "nginx-deployment" deleted

4. Confirm that the application is deleted.

5. Issue the following command to get the pods that are deployed.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get pods

No resources found in default namespace.

6. Notice that the pods have been deleted and are no longer deployed.

7. Issue the following command to get the services that are deployed.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP,12250/TCP 46h

my-service LoadBalancer 10.96.56.91 XXX.XXX.XXX.88 80:32422/TCP 2m42s

8. Review the deployed services.

9. To clean up the deployed LoadBalancer service, issue the following command:

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl delete service my-service -n default

service "my-service" deleted

10. Confirm that the service is deleted.

11. Issue the following command to get the services that are deployed.

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$ kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP,12250/TCP 46h

iwan_hooge@cloudshell:~ (eu-amsterdam-1)$

12. Notice that the LoadBalancer service has been deleted and is no longer deployed.

- A summary of the commands used can be found below:

kubectl get pods

kubectl delete deployment nginx-deployment --namespace default

kubectl get pods

kubectl get svc

kubectl delete service my-service -n default

kubectl get svc

Conclusion

In this tutorial, we have deployed a new Kubernetes Cluster on the Oracle Kubernetes Engine (OKE) platform using the custom create method. During this deployment, we selected the Flannel CNI plugin. We deployed the Cilium CNI plugin and removed the Flannel CNI plugin so that Cilium was the main CNI plugin for network (security) services for our Cloud Native applications. We then used the Cilium CNI plugin to deploy and test a NetworkPolicy and LoadBalancer service.